Differential Privacy in Large Language Model Training: Benefits and Tradeoffs

Jan 15, 2026

When you train a large language model on real human text (medical records, chat logs, emails, reviews), you’re not just teaching it grammar. You’re feeding it the raw stuff of people’s lives. And if you’re not careful, the model can remember it. Not in the way you remember your birthday, but in a way that lets an attacker pull out someone’s full name, Social Security number, or private diagnosis just by asking the right questions. That’s where differential privacy comes in. It doesn’t promise perfect secrecy. It promises something better: a mathematically proven shield against exactly that kind of leak.

What Differential Privacy Actually Does

Differential privacy isn’t about hiding data. It’s about hiding the impact of any single person’s data. Think of it like this: if you add one more person’s medical note to a dataset, how much does the model’s output change? Differential privacy says: it shouldn’t change much. Not enough for someone to tell whether that person was even in the training set.

This is done by adding just enough random noise to the training process, specifically to the gradients that update the model’s weights. The technique is called Differentially Private Stochastic Gradient Descent (DP-SGD). It works by clipping each individual example’s gradient to a maximum norm, then adding Gaussian noise before averaging across the batch. The result? The model learns patterns from the crowd, not details from individuals.

The magic is in the numbers: epsilon (ε) and delta (δ). Epsilon is your privacy budget. Lower ε means tighter privacy. ε=1 is strong. ε=8 is weak. Delta is, loosely, the chance that the ε guarantee fails to hold. You want δ close to zero, like 1 in a million. Together, they give you a measurable guarantee: even if an attacker knows everything else about you, they can’t tell with confidence whether your data was used to train the model.

Why It Matters for Large Language Models

LLMs are greedy. They memorize. A 2023 study showed that GPT-3 could regurgitate verbatim snippets from training data, including private emails and medical notes, when prompted just right. That’s not a bug. It’s a feature of how they learn. And it’s a legal nightmare under GDPR, HIPAA, or CCPA.

Differential privacy fixes this at the source. Instead of trying to scrub data after the fact (which rarely works), it builds privacy into the learning process. Google, for example, now uses DP-SGD to train synthetic data pipelines that power their LLMs. The result? Models that still answer questions about climate science or legal procedures, but can’t spit out your cousin’s therapy session.

This isn’t theoretical. A healthcare startup in Oregon used differential privacy to train a clinical note summarization model with ε=4.2. It met HIPAA requirements. It kept 89% of the original model’s accuracy on medical terminology. That’s not just compliance. It’s usable AI.

The Cost: Accuracy, Speed, and Memory

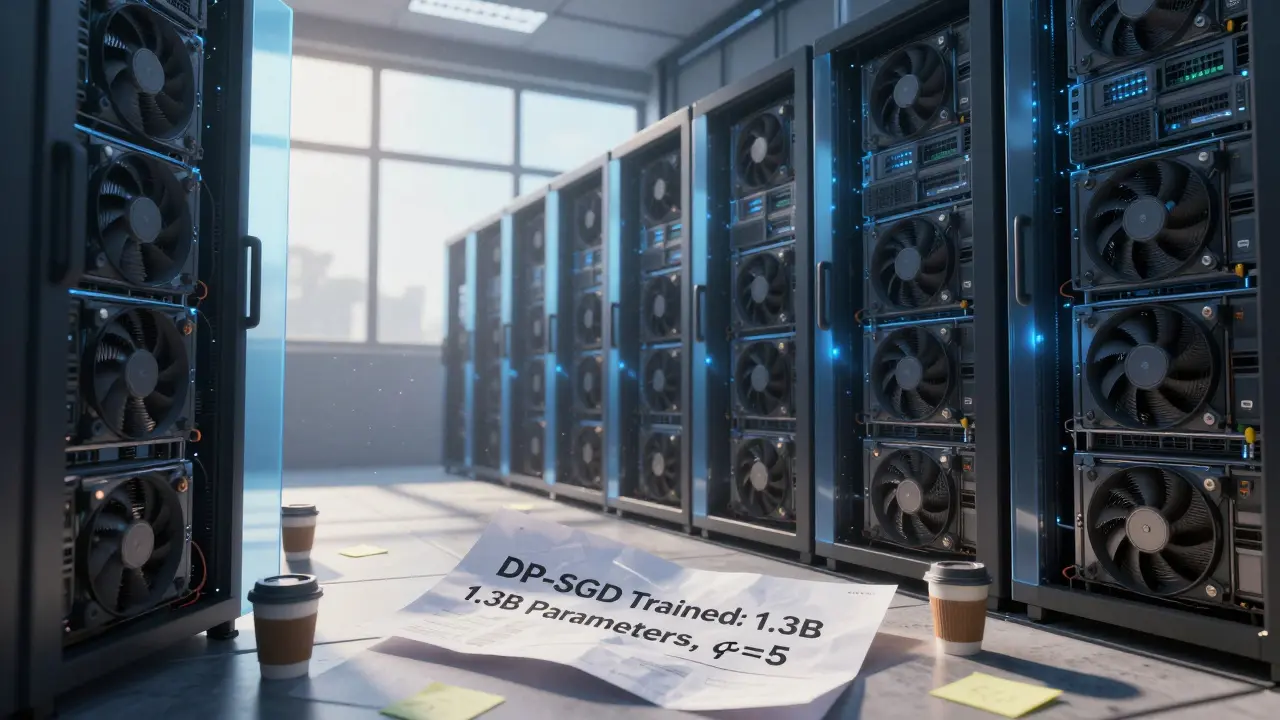

There’s no free lunch. Adding noise means the model learns slower and less precisely. At ε=3, most LLMs lose 5-15% accuracy on standard benchmarks like GLUE or SuperGLUE. That’s the difference between answering 90% of questions correctly and 78%. For customer service bots, that’s acceptable. For diagnostic assistants, it’s risky.

Training time? It balloons. DP-SGD can’t use batched gradients efficiently: it has to compute gradients for each training example individually. That kills GPU parallelism. Training a 7B-parameter model with ε=5 can take 2-3 times longer than the non-private version. One team on Reddit reported needing 8 A100 GPUs for 14 days just to get ε=5 on a 1.3B model.

Memory usage jumps too. DP-SGD stores per-sample gradients, which can increase memory needs by 20-40%. That means you need bigger hardware, or fewer parameters.

And tuning it? That’s a black art. You’ve got to pick clipping norms (usually 0.1-1.0), noise multipliers (0.5-2.0), and microbatch sizes. Mess up one, and you end up with a weaker privacy guarantee than you planned or a model that barely learns. Developers spend weeks just finding settings that don’t crash the model or make it useless.
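To make the knobs above concrete, here is a minimal NumPy sketch of the gradient aggregation inside one DP-SGD step: clip each example's gradient, sum, add Gaussian noise, then average. The function name `dp_sgd_step` and its parameters are illustrative assumptions, not the API of Opacus or any other library, and the sketch leaves out privacy accounting entirely.

```python
import numpy as np

def dp_sgd_step(per_example_grads, clip_norm, noise_multiplier, rng):
    """Aggregate per-example gradients the DP-SGD way (illustrative only).

    per_example_grads: array of shape (batch_size, num_params),
    holding one gradient row per training example.
    """
    batch_size, num_params = per_example_grads.shape
    # L2 norm of each example's gradient.
    norms = np.linalg.norm(per_example_grads, axis=1, keepdims=True)
    # Shrink gradients whose norm exceeds clip_norm; leave smaller ones as-is.
    scale = np.minimum(1.0, clip_norm / np.maximum(norms, 1e-12))
    clipped = per_example_grads * scale
    # Gaussian noise with std = noise_multiplier * clip_norm, added to the sum.
    noise = rng.normal(0.0, noise_multiplier * clip_norm, size=num_params)
    return (clipped.sum(axis=0) + noise) / batch_size

# Hypothetical toy batch: two examples, two parameters.
rng = np.random.default_rng(0)
grads = np.array([[3.0, 4.0], [0.3, 0.4]])
noisy_grad = dp_sgd_step(grads, clip_norm=1.0, noise_multiplier=1.0, rng=rng)
```

Note that the input is a full `(batch_size, num_params)` array rather than a single averaged gradient. Holding one gradient per example is where the extra memory and the lost parallelism come from.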

How It Compares to Other Methods

People used to try anonymizing data by removing names, emails, IDs. That’s called heuristic anonymization. It sounds smart. It’s not. A 2020 study showed that even after scrubbing, attackers could re-identify individuals in medical text datasets with 80% accuracy using just a few auxiliary facts (like zip code + diagnosis + age).

Differential privacy doesn’t rely on assumptions. It doesn’t care what the attacker knows. The math holds.

Other methods like federated learning or homomorphic encryption help in some cases, but they’re not built for LLM-scale training. Federated learning works for mobile devices, not billion-parameter models. Homomorphic encryption is too slow for real-world use. Differential privacy is the only one that scales and comes with provable guarantees.

Real-World Implementation Challenges

You can’t just plug in a library and call it done. Google’s DP library has solid docs. Opacus, the popular PyTorch tool, doesn’t. Users on GitHub complain that Opacus doesn’t support model sharding for models over 1B parameters. That means you can’t train large LLMs with it without rewriting core parts of DeepSpeed or Hugging Face’s Trainer.

Privacy accounting is another hidden cost. You have to track how much privacy budget you’ve spent across every training run, fine-tuning step, and evaluation. One engineer told me he spent half his time just verifying his privacy ledger wasn’t leaking. Tools like the Privacy Ledger help, but they’re not beginner-friendly.

Community support? Thin. The r/DiffPriv subreddit has 1,200 members. Most ML engineers have never touched DP-SGD. The learning curve is 40-60 hours just to understand how to set ε and δ without breaking everything.

Who’s Using It, and Why

Adoption is climbing fast. The global market for differential privacy is projected to hit $1.87 billion by 2027. Why? Regulation. Healthcare leads: 42% of implementations. HIPAA demands that patient data can’t be reconstructed from AI outputs. Financial services follow: 28%. Banks can’t risk leaking credit histories or transaction patterns.

Cloud providers are catching up. Google Cloud, AWS SageMaker, and Azure all now offer built-in DP tools. Pricing? Around $0.05-$0.20 per extra hour of training. For enterprises, that’s a drop in the bucket compared to the cost of a data breach.

Enterprise teams use ε=3-5 for production. Researchers push to ε=1-2 to test limits. The European Data Protection Board explicitly says DP with ε≤2 can help meet GDPR requirements. That’s not a suggestion. It’s a legal pathway.

The Future: Can It Scale?

The biggest roadblock isn’t math. It’s scale. Training a trillion-parameter model with DP-SGD? Right now, it’s impossible. The memory and compute demands explode.

But new tools are changing that. DP-ZeRO, developed by Bu et al. in 2023, combines differential privacy with DeepSpeed’s memory sharding. Suddenly, 7B+ parameter models can be trained privately. Google’s 2023 paper showed private fine-tuning of 11B models using synthetic data pipelines.

Next up? Tighter composition theorems (2024-2025) that account for cumulative privacy loss more precisely, so the same training run spends less of the budget. Hardware acceleration for private ML is in early R&D. Stanford researchers warn we need algorithmic breakthroughs before trillion-parameter models become feasible.

But the direction is clear. Gartner predicts 65% of enterprise LLM deployments in regulated industries will use differential privacy by 2026. It’s not optional anymore. It’s infrastructure.

When Not to Use It

Differential privacy isn’t for every use case. If your model needs to recall rare facts, like a specific drug dosage or a legal statute, it might fail. Noise blurs the edges. If you’re building a trivia bot or a legal research assistant that must cite exact sources, DP might hurt too much.

It also doesn’t stop all attacks. Membership inference attacks, where an attacker tries to guess if your data was used, can still work, especially if the model is overconfident. Differential privacy reduces the risk, but doesn’t eliminate it.

And if you’re a small team with limited compute? Start with ε=8. Get the model working. Then tighten. Don’t start at ε=1 and give up after a week.

Final Thought: It’s Not Perfect. But It’s the Best We Have.

Differential privacy isn’t magic. It’s math. And math doesn’t lie. It’s the only privacy guarantee that’s survived 15 years of attacks by cryptographers, hackers, and academics. As Google’s Ilya Mironov said, it’s the only one that still stands. It’s not a silver bullet. But for LLMs trained on human data? It’s the only bullet that matters.

You can’t train powerful language models on real data and ignore privacy. The risks are too high. The regulations are too strict. Differential privacy gives you a way forward: slow, expensive, and complex, but grounded in science, not hope.

Start with ε=8. Measure your accuracy drop. Track your training time. Learn the tools. Then decide: is your model worth the cost? If the answer is yes, you’re not just building AI. You’re building trustworthy AI.

What is epsilon (ε) in differential privacy?

Epsilon (ε) is the privacy budget that controls how much the model’s output can change when one person’s data is added or removed. Lower values (like ε=1) mean stronger privacy but lower model accuracy. Higher values (like ε=8) mean weaker privacy but better performance. It’s a direct tradeoff: the tighter the privacy, the more the model struggles to learn.
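One way to get a feel for what different ε values mean is to turn them into a bound on what an attacker can infer. Under pure ε-differential privacy (assuming δ=0, a simplification the article does not make), adding or removing one person changes the probability of any output by at most a factor of e^ε, so the attacker's odds that you were in the training set can grow by at most that factor. The helper names below are illustrative assumptions.

```python
import math

def max_likelihood_ratio(epsilon):
    """Largest factor by which one person's data can shift any output probability."""
    return math.exp(epsilon)

def max_attacker_posterior(epsilon, prior=0.5):
    """Upper bound on an attacker's belief that a person was in the training set.

    Assumes pure epsilon-DP (delta = 0) and an attacker who starts with `prior`.
    """
    prior_odds = prior / (1.0 - prior)
    posterior_odds = prior_odds * math.exp(epsilon)
    return posterior_odds / (1.0 + posterior_odds)

for eps in (1, 3, 8):
    print(eps, round(max_likelihood_ratio(eps), 1), round(max_attacker_posterior(eps), 4))
```

Starting from a coin-flip prior, ε=1 caps the attacker's confidence at roughly 73%, while at ε=8 the cap is above 99.9%, which is why the larger value counts as weak. These are worst-case bounds. Real attacks typically do worse than the cap.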

Does differential privacy prevent all privacy leaks?

No. It prevents re-identification and membership inference attacks with high probability, but it doesn’t eliminate all risks. For example, if a model is overconfident in its predictions, attackers might still guess whether someone’s data was in the training set. Differential privacy reduces these risks mathematically, but it’s not a cure-all. It should be part of a broader privacy strategy.

Can I use differential privacy with any LLM framework?

Not easily. Libraries like Google’s DP library and Opacus support DP-SGD, but they’re designed for smaller models. Training billion-parameter LLMs requires modifications to handle memory sharding and distributed training. Tools like DP-ZeRO now integrate with DeepSpeed to make this possible, but it’s not plug-and-play. You’ll need to adapt your training pipeline or use cloud tools like AWS Private Training or Google Cloud DP libraries.

How much does training with differential privacy cost?

Training time increases by 2-3x, and memory use goes up by 20-40%. On cloud platforms, you pay extra for the added compute, typically $0.05 to $0.20 per additional privacy-protected training hour. For enterprises, this is minor compared to legal fines or reputational damage. For researchers or small teams, it can be a major barrier, especially if you’re working with limited GPU resources.
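As a rough back-of-the-envelope check, the ranges quoted above can be applied to a baseline run. The sketch below assumes the surcharge applies only to the extra hours DP adds, which is one reading of "per additional privacy-protected training hour". The function name and the 100-hour, 40 GB baseline are hypothetical, and the underlying GPU cost of those extra hours is not included.

```python
def dp_overhead(baseline_hours, baseline_memory_gb,
                time_factor=(2.0, 3.0),
                memory_increase=(0.20, 0.40),
                surcharge_per_extra_hour=(0.05, 0.20)):
    """Return (low, high) estimates using the ranges quoted in the article."""
    hours = tuple(baseline_hours * f for f in time_factor)
    memory = tuple(baseline_memory_gb * (1.0 + m) for m in memory_increase)
    extra_hours = tuple(h - baseline_hours for h in hours)
    # Assumption: the DP surcharge is billed only on the added hours.
    surcharge = tuple(e * r for e, r in zip(extra_hours, surcharge_per_extra_hour))
    return {"hours": hours, "memory_gb": memory, "surcharge_usd": surcharge}

# Hypothetical baseline: 100 training hours, 40 GB peak memory.
estimate = dp_overhead(100, 40)
print(estimate)
```

For that baseline the estimate comes out to 200-300 training hours and 48-56 GB of memory. The point is that the time multiplier, not the per-hour surcharge, dominates the bill.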

Is differential privacy required by law?

Not explicitly, but it’s the most reliable way to comply. Regulations like GDPR and HIPAA require that personal data not be reconstructable from AI outputs. Differential privacy is the only technique with mathematically provable guarantees that meet this standard. The European Data Protection Board has stated that DP with ε≤2 can serve as a GDPR-compliant mechanism. In practice, regulators treat it as the gold standard.

What’s the best starting point for implementing differential privacy?

Start with ε=8-10. That gives you decent model performance while still offering meaningful privacy. Focus on getting your training pipeline working before tightening privacy. Use tools like Google’s DP library or AWS SageMaker Private Training. Monitor accuracy drop and training time. Only reduce ε if you need stricter compliance or are in a highly regulated industry. Most teams never need to go below ε=5 for production.
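If you do iterate from a loose ε toward a tighter one, remember the privacy accounting point from earlier: every run on the same data spends budget. Under basic sequential composition, the ε and δ values of successive runs simply add up. The class below is a minimal sketch of that bookkeeping. `PrivacyLedger` here is a hypothetical name for illustration, not the tool mentioned earlier in the article, and production accountants use tighter composition bounds than plain addition.

```python
class PrivacyLedger:
    """Track cumulative (epsilon, delta) under basic sequential composition."""

    def __init__(self, epsilon_budget, delta_budget):
        self.epsilon_budget = epsilon_budget
        self.delta_budget = delta_budget
        self.entries = []  # one (label, epsilon, delta) tuple per recorded run

    @property
    def epsilon_spent(self):
        return sum(eps for _, eps, _ in self.entries)

    @property
    def delta_spent(self):
        return sum(delta for _, _, delta in self.entries)

    def spend(self, label, epsilon, delta):
        """Record a run, refusing it if the total would exceed either budget."""
        if (self.epsilon_spent + epsilon > self.epsilon_budget
                or self.delta_spent + delta > self.delta_budget):
            raise ValueError(f"run '{label}' would exceed the privacy budget")
        self.entries.append((label, epsilon, delta))

    def remaining(self):
        return (self.epsilon_budget - self.epsilon_spent,
                self.delta_budget - self.delta_spent)

# Hypothetical usage: two runs against a total budget of epsilon=8, delta=1e-5.
ledger = PrivacyLedger(epsilon_budget=8.0, delta_budget=1e-5)
ledger.spend("first working run", epsilon=5.0, delta=1e-6)
ledger.spend("tightened run", epsilon=2.0, delta=1e-6)
print(ledger.remaining())
```

Plain addition is a conservative upper bound, so a ledger like this may refuse runs that a tighter accountant would allow. It will not under-report what has been spent.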

Bhavishya Kumar

January 15, 2026 AT 20:16

Differential privacy as implemented via DP-SGD represents a rigorous mathematical framework for mitigating membership inference risks in LLM training. The theoretical foundation is sound, particularly when epsilon is constrained below 5 and delta is held at 1e-6 or lower. However, the practical implementation challenges (gradient clipping thresholds, noise scaling, and microbatching) are often underappreciated by practitioners who assume library integration equates to compliance. The empirical accuracy degradation at ε=3 is not merely a technical nuisance; it fundamentally alters model behavior in safety-critical domains. Without rigorous benchmarking against non-private baselines, one risks deploying a model that is both private and useless.

ujjwal fouzdar

January 16, 2026 AT 00:50

Let me tell you something profound. We are not training models. We are training ghosts. Every gradient update, every noisy tweak, it’s like whispering secrets into the void and hoping the void doesn’t remember. Differential privacy is the veil. But who are we protecting? The patient? The email sender? Or just the lawyers who will sue us if the AI repeats their divorce details? We’re not solving privacy. We’re performing a ritual. A sacred dance between math and morality. And the math? It’s beautiful. But the morality? That’s just a contract we signed with our own guilt.

Anand Pandit

January 16, 2026 AT 09:22

Really glad to see this breakdown. It’s easy to get overwhelmed by the math, but you made it feel human. For teams just starting out, I’d say don’t panic about hitting ε=1 right away. I helped a med-tech startup get their first model up with ε=8 and it was fine for internal triage. Accuracy dropped 8%, but they could still flag high-risk cases. The key is iterative tightening: get feedback, measure real-world impact, then reduce epsilon in small steps. And use AWS SageMaker’s built-in DP. It saves weeks of debugging Opacus quirks. You’ve got this!

Reshma Jose

January 18, 2026 AT 01:49

okay so i just tried opacus on my 1.3b model and holy hell it took 11 days on 2 a100s and my accuracy tanked to like 62% and now my boss is asking why we cant just delete the emails?? like i get the theory but the cost?? its insane. also why does it need 40% more memory?? i thought noise was just adding random numbers?? why does that need so much ram?? someone please explain this to me like im 5 and not a grad student

rahul shrimali

January 19, 2026 AT 22:29

Just start with ε=8 and get your model working. Don’t overthink it. Train. Test. Measure. Then tighten. DP is not magic, it’s math. And math doesn’t care how you feel. Just do it.

Eka Prabha

January 20, 2026 AT 17:45

Let’s be honest: this whole differential privacy push is a distraction. The real issue is that Big Tech doesn’t want to stop hoarding our data. They slap on epsilon noise like a Band-Aid on a bullet wound and call it ‘compliance.’ Meanwhile, the same companies are still scraping our social media, our medical forums, our private messages, all under the guise of ‘publicly available.’ And now they want us to believe that adding Gaussian noise makes it ethical? Please. The only thing this guarantees is that the regulators will stop asking questions while the models still leak. It’s not privacy. It’s performative security. And the fact that governments are treating ε≤2 as a legal shield? That’s not science. That’s surrender.

Bharat Patel

January 21, 2026 AT 08:16

There’s a quiet poetry in differential privacy. It doesn’t erase the individual; it acknowledges them. By making their presence statistically indistinguishable, we honor their right to exist in the data without being reduced to a data point. The noise isn’t interference; it’s reverence. The model learns the shape of humanity, not its fingerprints. We’re not building machines that memorize. We’re building machines that respect. And in a world where algorithms are increasingly the arbiters of our lives, isn’t that the least we can ask for?

Bhagyashri Zokarkar

January 22, 2026 AT 14:15

i just read this whole thing and now i feel like crying. not because its complicated but because i realized my grandma’s medical records are probably in some model right now and she never even knew she was being used. and now they’re adding noise like its some kind of magic spell and pretending its enough? but what if the model still remembers her diagnosis? what if someone asks it ‘what did jane doe have in 2021’ and it says ‘stage 4 pancreatic’?? and then some insurance company finds out?? and then they deny her coverage?? and i cant even prove it happened because the math says its impossible?? i just want to scream. i dont care about epsilon or delta. i care that my grandma is still in there. and no one is listening.

Rakesh Dorwal

January 24, 2026 AT 04:55

Why are we letting foreign tech giants dictate how Indian data is handled? We’re training models on Indian patient records, Indian chat logs, Indian medical histories, and then we’re letting Google and AWS control the privacy tools? This isn’t innovation. It’s digital colonialism. We need our own DP standards. Our own libraries. Our own epsilon values calibrated for Indian demographics. Not some Silicon Valley formula that assumes everyone has the same privacy needs. We don’t need their noise. We need our sovereignty.