Leap Nonprofit AI Hub: Practical AI Tools for Nonprofits

At the heart of this hub is AI for nonprofits: artificial intelligence tools built to help mission-driven organizations scale impact without compromising ethics or compliance. Often framed as responsible AI, it's not about flashy tech; it's about making tools that work for teams with limited tech staff and tight budgets. Many of the posts here focus on vibe coding, a way for non-developers to build apps using plain-language prompts instead of code, which lets clinicians, fundraisers, and program managers create custom tools without touching sensitive data. Closely related is LLM ethics: deploying large language models in ways that avoid bias, protect privacy, and ensure accountability, especially in healthcare and finance. And because data doesn't stop at borders, AI compliance (following laws such as the GDPR and the California AI Transparency Act) is no longer optional; it's part of daily operations.

You’ll find guides that cut through the hype: how to reduce AI costs, what security rules non-tech users must follow, and why smaller models often beat bigger ones. No theory without action. No jargon without explanation. Just clear steps for teams that need to do more with less.

What follows are real examples, templates, and hard-won lessons from nonprofits using AI today. No fluff. Just what works.

Why Vibe Coding Is Democratizing Software Creation for New Builders

Vibe coding lets anyone create functional software by describing ideas in plain language instead of writing code. AI generates and refines working apps in seconds, opening software creation to non-developers, artists, entrepreneurs, and learners.

Content Lifecycle with Generative AI: Creation, Review, Publish, and Archive

Learn how generative AI turns content from static files into living assets through a continuous cycle of creation, review, publishing, and archiving, keeping your brand authoritative, visible, and aligned with current search standards.
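One way to make that cycle concrete is to treat each content item as a small state machine. The sketch below is a minimal illustration, assuming four stages named after the steps above and hypothetical transition rules; `Stage`, `TRANSITIONS`, and `advance` are illustrative names, not part of any particular CMS. Its main point is that nothing reaches publication without passing through human review.

```python
from enum import Enum


class Stage(Enum):
    DRAFT = "draft"            # created, possibly with generative AI help
    IN_REVIEW = "in_review"    # a person checks facts, tone, and brand fit
    PUBLISHED = "published"
    ARCHIVED = "archived"


# Hypothetical transition rules: the only route to PUBLISHED runs through IN_REVIEW
TRANSITIONS: dict[Stage, set[Stage]] = {
    Stage.DRAFT: {Stage.IN_REVIEW},
    Stage.IN_REVIEW: {Stage.DRAFT, Stage.PUBLISHED},      # reviewers can send work back
    Stage.PUBLISHED: {Stage.IN_REVIEW, Stage.ARCHIVED},   # refresh stale pages or retire them
    Stage.ARCHIVED: {Stage.DRAFT},                        # revive as a new draft, closing the cycle
}


def advance(current: Stage, target: Stage) -> Stage:
    """Return the new stage, or raise ValueError if the move is not allowed."""
    if target not in TRANSITIONS[current]:
        raise ValueError(f"cannot move from {current.value} to {target.value}")
    return target


if __name__ == "__main__":
    stage = Stage.DRAFT
    for nxt in (Stage.IN_REVIEW, Stage.PUBLISHED, Stage.ARCHIVED):
        stage = advance(stage, nxt)
        print(stage.value)
    try:
        advance(Stage.DRAFT, Stage.PUBLISHED)
    except ValueError as err:
        print(err)  # cannot move from draft to published
```

Keeping the rules in one table makes it easy to audit: anyone can see at a glance that AI-drafted content has no shortcut to publication.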

Input Tokens vs Output Tokens: Why LLM Generation Costs More

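Output tokens in LLMs typically cost 3 to 8 times more than input tokens because they are more expensive to produce: the model reads the whole prompt in one parallel pass, but it must generate the response one token at a time, with a full pass through the model for each. Learn why this pricing exists and how to cut your AI costs by controlling response length and context.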
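The arithmetic is easy to check yourself. This sketch assumes hypothetical prices of $3 per million input tokens and $15 per million output tokens (a 5x multiplier, inside the range above); substitute your provider's published rates. The function names are illustrative.

```python
# Hypothetical prices in USD per million tokens; check your provider's price list
INPUT_PRICE_PER_M = 3.00
OUTPUT_PRICE_PER_M = 15.00


def request_cost(input_tokens: int, output_tokens: int,
                 input_price: float = INPUT_PRICE_PER_M,
                 output_price: float = OUTPUT_PRICE_PER_M) -> float:
    """Dollar cost of one LLM call, with prices given per million tokens."""
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


def output_share(input_tokens: int, output_tokens: int) -> float:
    """Fraction of the bill that comes from output tokens."""
    return request_cost(0, output_tokens) / request_cost(input_tokens, output_tokens)


if __name__ == "__main__":
    # 2,000-token prompt, 500-token answer
    print(f"${request_cost(2_000, 500):.4f}")          # $0.0135
    print(f"{output_share(2_000, 500):.0%} of cost")   # 56% of cost
    # Same prompt with the answer capped at 200 tokens
    print(f"${request_cost(2_000, 200):.4f}")          # $0.0090
```

Here output is only a fifth of the tokens (500 of 2,500) but over half of the bill, which is why capping response length is usually the first lever to pull.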

Risk Assessment for Generative AI Deployments: Impact, Likelihood, and Controls

Generative AI deployments carry real, measurable risks, from data leaks to regulatory fines. Learn how to assess impact, likelihood, and controls before your next AI rollout.
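A common way to put numbers on this is a risk matrix: score impact and likelihood from 1 to 5, multiply them, and re-score once controls are in place. The sketch below assumes a simplified rule that each control lowers likelihood by one step, plus hypothetical rating thresholds and example risks; real assessments usually judge each control's effectiveness case by case.

```python
from dataclasses import dataclass, field


@dataclass
class Risk:
    name: str
    impact: int       # 1 (negligible) to 5 (severe), e.g. the size of a possible fine
    likelihood: int   # 1 (rare) to 5 (almost certain), before any controls
    controls: list[str] = field(default_factory=list)

    def inherent_score(self) -> int:
        return self.impact * self.likelihood

    def residual_score(self) -> int:
        # Simplifying assumption: each control lowers likelihood by one step, never below 1
        residual_likelihood = max(1, self.likelihood - len(self.controls))
        return self.impact * residual_likelihood


def rating(score: int) -> str:
    """Hypothetical thresholds on the 1-25 scale."""
    if score >= 15:
        return "high"
    if score >= 8:
        return "medium"
    return "low"


if __name__ == "__main__":
    risks = [
        Risk("Donor PII leaked in prompts", impact=5, likelihood=4,
             controls=["redact PII before sending", "vendor terms that bar training on inputs"]),
        Risk("Chatbot gives wrong eligibility info", impact=3, likelihood=3),
    ]
    for r in sorted(risks, key=lambda r: r.residual_score(), reverse=True):
        inherent, residual = r.inherent_score(), r.residual_score()
        print(f"{r.name}: inherent {inherent} ({rating(inherent)}), "
              f"residual {residual} ({rating(residual)})")
    # Donor PII leaked in prompts: inherent 20 (high), residual 10 (medium)
    # Chatbot gives wrong eligibility info: inherent 9 (medium), residual 9 (medium)
```

Sorting by residual score puts the risks that still need attention at the top, and a risk whose score never moves is a sign that it has no controls yet.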

Domain-Specialized LLMs: How Code, Math, and Medicine Models Outperform General AI

Domain-specialized LLMs like CodeLlama, Med-PaLM 2, and MathGLM can outperform general-purpose models on code, math, and medical tasks, often with higher accuracy and lower costs. Here's how they work, and why they're changing the game.

Migrating Between LLM Providers: How to Avoid Vendor Lock-In in 2026

In 2026, avoiding LLM vendor lock-in means building portable AI systems. Learn how to use open-source models, model-agnostic proxies, and self-hosted infrastructure to cut costs, reduce latency, and stay compliant.
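The core idea behind a model-agnostic proxy is that application code talks to one small interface, and the provider behind it is chosen by configuration. The sketch below assumes that design; the adapter classes are hypothetical stand-ins that echo the prompt instead of calling a real vendor SDK or self-hosted server, so it runs without API keys.

```python
from typing import Callable, Optional, Protocol


class ChatModel(Protocol):
    """The one interface application code depends on."""
    def complete(self, prompt: str) -> str: ...


class HostedModelStub:
    """Hypothetical stand-in for a commercial API client; a real adapter would call the vendor SDK."""
    def complete(self, prompt: str) -> str:
        return f"[hosted] {prompt}"


class SelfHostedModelStub:
    """Hypothetical stand-in for an open-source model served on your own infrastructure."""
    def complete(self, prompt: str) -> str:
        return f"[self-hosted] {prompt}"


class OutageStub:
    """Simulates a provider that is down, to demonstrate fallback."""
    def complete(self, prompt: str) -> str:
        raise ConnectionError("provider outage")


# Providers are looked up by name, so switching vendors is a config change, not a code change
REGISTRY: dict[str, Callable[[], ChatModel]] = {
    "hosted": HostedModelStub,
    "self_hosted": SelfHostedModelStub,
    "outage": OutageStub,
}


class ModelProxy:
    """Sends each prompt to the first configured provider that succeeds."""

    def __init__(self, order: list[str]):
        self.models = [REGISTRY[name]() for name in order]

    def complete(self, prompt: str) -> str:
        last_error: Optional[Exception] = None
        for model in self.models:
            try:
                return model.complete(prompt)
            except Exception as err:  # a production proxy would catch narrower, provider-specific errors
                last_error = err
        raise RuntimeError("all configured providers failed") from last_error


if __name__ == "__main__":
    proxy = ModelProxy(["outage", "self_hosted"])
    print(proxy.complete("Summarize this grant report"))  # [self-hosted] Summarize this grant report
```

Because `ModelProxy` depends only on the `ChatModel` interface, moving to another provider means writing one adapter and changing the `order` list, rather than touching every call site.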

Replit for Vibe Coding: Cloud Dev, Agents, and One-Click Deploys

Replit transforms coding into a seamless, AI-powered experience where you build, collaborate, and deploy apps in minutes, with no setup required. Perfect for vibe coding, startups, and educators.

Marketing the Wins: Telling the Vibe Coding Success Story Internally

Vibe coding lets non-technical teams build real software in weeks, not months, using AI. Learn how internal stories of real wins, from restaurants to marketing teams, are changing how companies think about innovation, speed, and ownership.

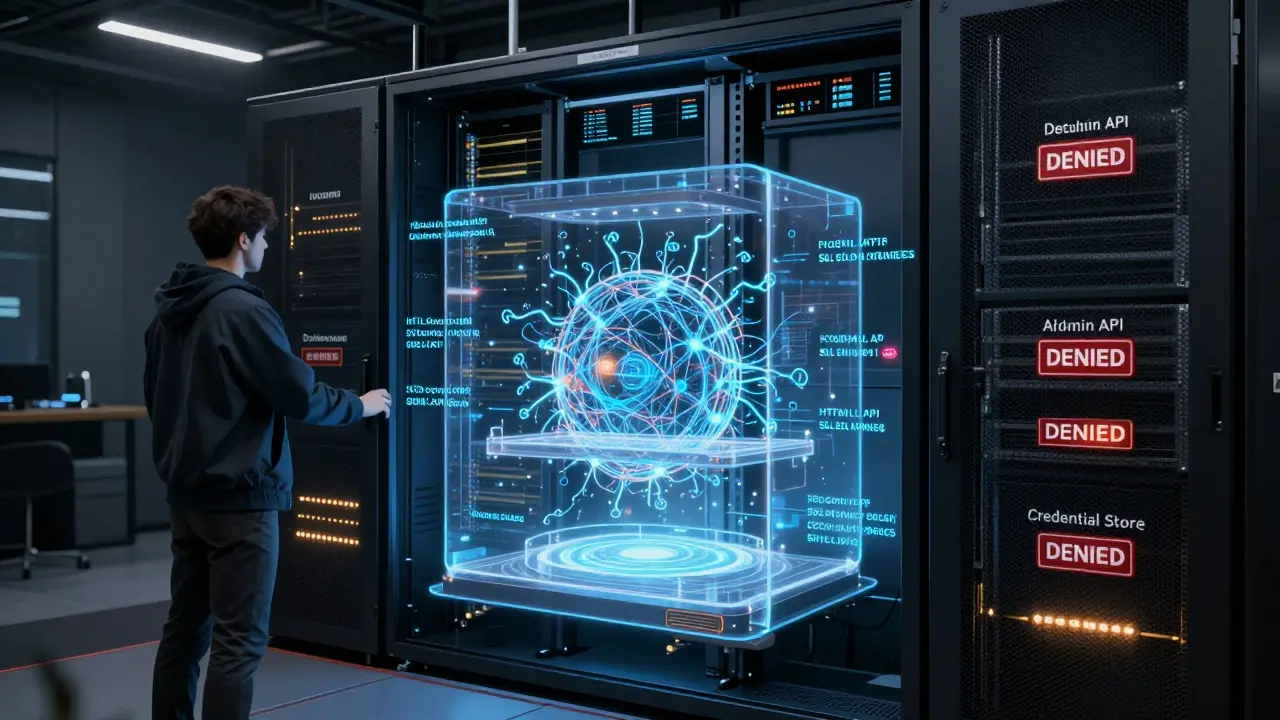

Fixing Insecure AI Patterns: Sanitization, Encoding, and Least Privilege

AI security isn't about fancy tools. It comes down to three basics: sanitizing inputs, encoding outputs, and limiting access. Without them, even the smartest models can leak data, inject code, or open backdoors. Here's how to fix it.
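Each of the three basics fits in a few lines of Python. This is a minimal sketch, assuming a web app that displays model output as HTML, a hypothetical input length limit, and hypothetical role and tool names. Input cleanup like this removes hidden control characters and oversized payloads but does not by itself stop prompt injection, which is why output encoding and permission checks sit alongside it.

```python
import html
import re

MAX_INPUT_CHARS = 4_000  # hypothetical limit; tune for your use case
# Control characters except tab (\x09), newline (\x0a), and carriage return (\x0d)
_CONTROL_CHARS = re.compile(r"[\x00-\x08\x0b\x0c\x0e-\x1f\x7f]")


def sanitize_input(text: str) -> str:
    """Drop control characters and cap length before user text reaches the model."""
    return _CONTROL_CHARS.sub("", text)[:MAX_INPUT_CHARS]


def encode_for_html(model_output: str) -> str:
    """Treat model output as untrusted: escape it before inserting it into a web page."""
    return html.escape(model_output, quote=True)


# Least privilege: each assistant role may call only the tools it needs (hypothetical names)
ALLOWED_TOOLS: dict[str, set[str]] = {
    "donor_faq_bot": {"search_public_faq"},
    "case_worker_assistant": {"search_public_faq", "read_case_notes"},
}


def authorize_tool(role: str, tool: str) -> None:
    """Raise PermissionError unless the role is explicitly allowed to use the tool."""
    if tool not in ALLOWED_TOOLS.get(role, set()):
        raise PermissionError(f"{role} may not call {tool}")


if __name__ == "__main__":
    print(repr(sanitize_input("Hello\x00 world\n")))  # 'Hello world\n'
    print(encode_for_html('<script>alert("x")</script>'))
    # &lt;script&gt;alert(&quot;x&quot;)&lt;/script&gt;
    authorize_tool("case_worker_assistant", "read_case_notes")  # allowed, no error
    try:
        authorize_tool("donor_faq_bot", "read_case_notes")
    except PermissionError as err:
        print(err)  # donor_faq_bot may not call read_case_notes
```

The allowlist defaults to an empty set, so an unknown role gets no tools at all rather than every tool.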

Model Distillation for Generative AI: Smaller Models with Big Capabilities

Model distillation trains small AI models to approach the performance of much larger ones by learning from their outputs and reasoning patterns. Learn how it cuts costs, speeds up responses, and powers real-world AI applications in 2026.
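The classic form of distillation (Hinton et al., 2015) trains the student to match the teacher's temperature-softened output probabilities alongside the usual ground-truth labels. The sketch below computes that loss for a single example in plain Python. It illustrates soft-label distillation specifically; distilling step-by-step reasoning traces, as the post describes, is a related but different setup. The temperature of 2.0 and the 50/50 weighting are illustrative defaults, not recommendations.

```python
import math


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Convert logits to probabilities; a higher temperature gives a softer distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: list[float], teacher_logits: list[float],
                      true_label: int, temperature: float = 2.0, alpha: float = 0.5) -> float:
    """alpha * soft-target loss + (1 - alpha) * ordinary cross-entropy on the true label."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # KL(teacher || student) on the softened distributions, scaled by T^2 so gradients keep
    # a comparable magnitude as the temperature changes
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student) if pt > 0)
    soft_loss = kl * temperature ** 2
    hard_loss = -math.log(softmax(student_logits)[true_label])
    return alpha * soft_loss + (1 - alpha) * hard_loss


if __name__ == "__main__":
    teacher = [4.0, 1.0, 0.5]
    close_student = [3.5, 1.2, 0.4]
    far_student = [0.5, 3.0, 1.0]
    print(distillation_loss(close_student, teacher, true_label=0))
    print(distillation_loss(far_student, teacher, true_label=0))  # larger: far from the teacher
```

In a real training loop the same loss would be computed over batches with a framework's autodiff, and only the student's weights would be updated.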

Multi-Task Fine-Tuning for Large Language Models: One Model, Many Skills

Multi-task fine-tuning trains one language model on many tasks at once, which can boost performance and cut costs compared with maintaining a separate model for each task. Learn how it works, why it often outperforms single-task methods, and how companies are using it to build smarter AI.
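A key practical step in multi-task fine-tuning is building one training set that mixes examples from every task, each tagged so the model knows which skill is wanted. The sketch below assumes toy, hypothetical tasks and uses temperature-scaled sampling (one common mixing strategy, used in the T5 paper) so that small tasks are not drowned out by large ones. It only prepares data; the fine-tuning run itself is left to whatever training framework you use.

```python
import random

# Hypothetical toy tasks; lists are repeated to simulate datasets of different sizes
TASKS: dict[str, list[tuple[str, str]]] = {
    "summarize": [("Our food bank served 1,200 families this quarter, up from 900.",
                   "Families served rose by a third.")] * 6,
    "classify_sentiment": [("Thank you so much for the help!", "positive")] * 3,
    "extract_date": [("The gala moved to 12 May 2026.", "2026-05-12")] * 1,
}


def mixing_weights(sizes: dict[str, int], temperature: float = 2.0) -> dict[str, float]:
    """p(task) proportional to size ** (1 / T): T = 1 is size-proportional, larger T is flatter."""
    scaled = {name: n ** (1 / temperature) for name, n in sizes.items()}
    total = sum(scaled.values())
    return {name: s / total for name, s in scaled.items()}


def build_mixture(tasks: dict[str, list[tuple[str, str]]], num_examples: int,
                  temperature: float = 2.0, seed: int = 0) -> list[dict[str, str]]:
    """Sample a mixed training set, prefixing each input with its task name."""
    rng = random.Random(seed)
    weights = mixing_weights({name: len(ex) for name, ex in tasks.items()}, temperature)
    names = list(weights)
    mixture = []
    for _ in range(num_examples):
        task = rng.choices(names, weights=[weights[n] for n in names])[0]
        source, target = rng.choice(tasks[task])
        # The task prefix tells the single model which skill is being asked for
        mixture.append({"input": f"{task}: {source}", "target": target})
    return mixture


if __name__ == "__main__":
    sizes = {name: len(examples) for name, examples in TASKS.items()}
    print({k: round(v, 3) for k, v in mixing_weights(sizes).items()})
    # {'summarize': 0.473, 'classify_sentiment': 0.334, 'extract_date': 0.193}
    for row in build_mixture(TASKS, num_examples=3):
        print(row["input"])
```

At temperature 1 the weights would be 0.6, 0.3, and 0.1, so raising the temperature nearly doubles the share of the smallest task.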

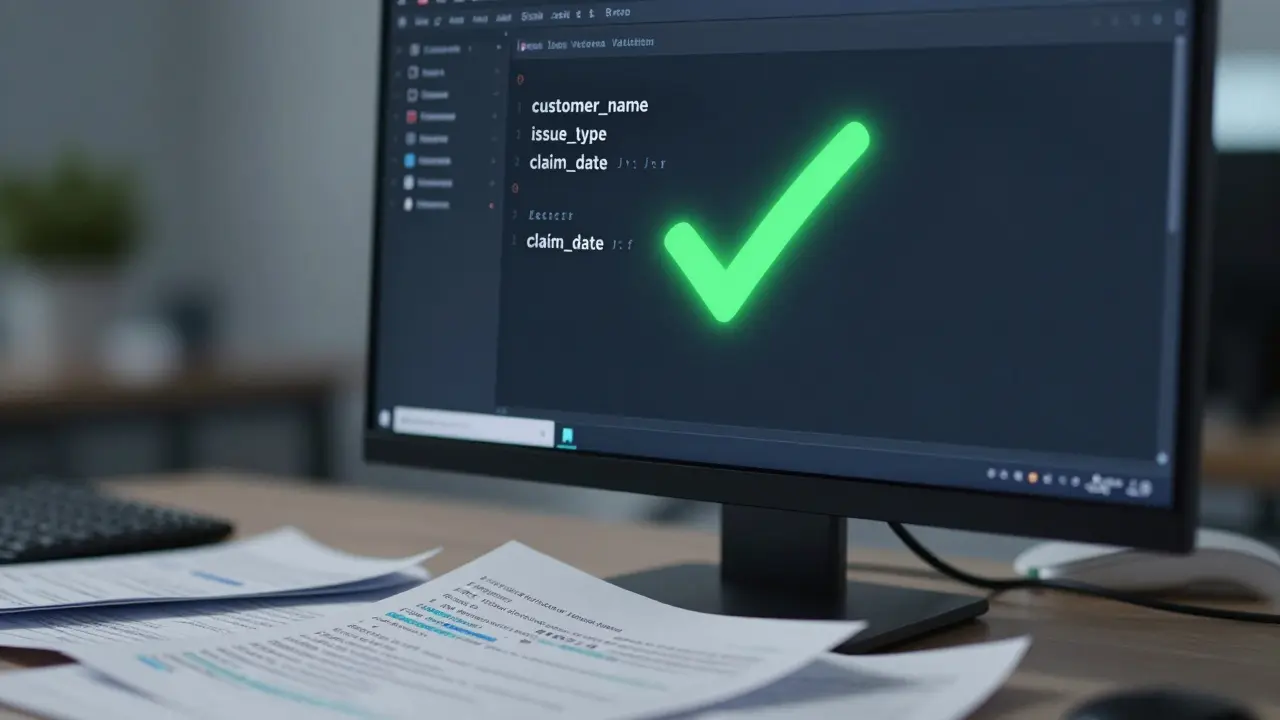

Structured Output Generation in Generative AI: How Schemas Stop Hallucinations in Production

Structured output generation uses schemas to force generative AI to return clean, predictable data instead of unreliable text. This eliminates parsing errors, reduces retries, and makes AI usable in production systems, without requiring perfect model accuracy.
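Providers that support structured output can constrain generation to a schema; on the application side it is still worth validating what comes back and retrying on failure. The sketch below shows only that client-side half using the standard library, assuming a hypothetical donation-record schema and a stand-in `call_model` function in place of a real API client. In production, a library such as Pydantic or a JSON Schema validator would be the usual choice.

```python
import json
from typing import Callable

# Hypothetical schema: required field name -> expected Python type after JSON parsing
DONATION_SCHEMA: dict[str, type] = {"donor_name": str, "amount_usd": float, "recurring": bool}


def validate(raw: str, schema: dict[str, type]) -> dict:
    """Parse model output as JSON and check every required field; raise ValueError if anything is off."""
    data = json.loads(raw)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    for name, expected in schema.items():
        if name not in data:
            raise ValueError(f"missing field: {name}")
        value = data[name]
        # JSON has a single number type, so accept integers where floats are expected (bools excluded)
        if expected is float and isinstance(value, int) and not isinstance(value, bool):
            data[name] = value = float(value)
        if not isinstance(value, expected):
            raise ValueError(f"{name} should be {expected.__name__}")
    return data


def extract_with_retries(call_model: Callable[[str], str], prompt: str,
                         schema: dict[str, type], max_attempts: int = 3) -> dict:
    """Call the model until its reply validates, feeding each error back into the prompt."""
    for _ in range(max_attempts):
        raw = call_model(prompt)
        try:
            return validate(raw, schema)
        except ValueError as err:
            prompt = f"{prompt}\nYour previous reply was invalid ({err}). Reply with JSON only."
    raise RuntimeError(f"no valid output after {max_attempts} attempts")


if __name__ == "__main__":
    # Stand-in for a real API call: the first reply is chatty prose, the second is valid JSON
    replies = iter([
        "Sure! The donor gave $50.",
        '{"donor_name": "A. Rivera", "amount_usd": 50, "recurring": true}',
    ])
    result = extract_with_retries(lambda prompt: next(replies),
                                  "Extract the donation record from: 'A. Rivera gives $50 every month.'",
                                  DONATION_SCHEMA)
    print(result)  # {'donor_name': 'A. Rivera', 'amount_usd': 50.0, 'recurring': True}
```

When generation is already schema-constrained, this check should rarely fail; it remains a cheap guard against provider changes or a model that was called without the schema.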
