Architecture-First Prompt Templates for Vibe Coding: Build Better Code Faster

Jan, 24 2026

Most developers using AI coding tools like GitHub Copilot or Claude are stuck in a loop: generate code, fix errors, refactor, repeat. It’s not the AI’s fault; it’s the prompt. If you ask for "a user login system," you’ll get something that works… maybe. But it won’t be clean, secure, or maintainable. That’s where architecture-first prompt templates come in. This isn’t just better prompting; it’s a shift in how professional teams build software with AI.

Why Your AI Code Keeps Failing

You’ve probably tried prompts like:

- "Build a REST API for users."

- "Make a React frontend with auth."

- "Generate a Node.js backend."

These feel natural. They’re how you’d explain it to a junior dev. But AI doesn’t think like a human. It doesn’t know your team’s standards, your security policies, or your deployment pipeline. The result? Bloated files, missing tests, unsecured endpoints, and inconsistent structure. A December 2025 benchmark by Rocket.new showed that prompts without architectural details required 68% more iterations before code was deployable. That’s not just wasted time; it’s a drain on morale. Developers don’t want to clean up AI-generated messes. They want to build.

What Makes an Architecture-First Prompt Work

Architecture-first prompting flips the script. Instead of starting with features, you start with structure. Think of it like giving a builder blueprints before asking for drywall. According to Emergent’s March 2025 analysis, every effective architecture-first prompt includes six core elements:

- One clear objective: a single sentence that says what the system does. No fluff.

- Features as user actions: not "use JWT" but "users can log in with email and password and stay logged in across devices."

- Requirements as bullet points: clear, scannable, and specific.

- Concrete examples: show input and expected output. Example: "Input: {email: '[email protected]', password: '123'} → Output: {token: 'abc123', user: {id: 5, email: '[email protected]'}}"

- Explicit inputs, outputs, and success conditions: what does "done" look like? A working API? A passing test suite? A deployed container?

- Required integrations: "Use PostgreSQL, not SQLite. Use bcrypt for passwords. Enable CORS for https://app.yourcompany.com."

- Identity: What are you building? A microservice? A CLI tool? A mobile backend?

- Audience: Who uses this? End users? Internal admins? Other services?

- Features: What can they do? List them.

- Aesthetic: How should it feel? "Clean," "fast," "minimalist," "enterprise-grade," "developer-friendly."
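One way to keep the six core elements from drifting between prompts is to hold them in a small data structure and render the prompt from it. The sketch below is a minimal illustration, not a published template: the `ArchitecturePrompt` class, its field names, and the section labels are all hypothetical, and the sample values are adapted from the examples in this article.

```python
from dataclasses import dataclass


@dataclass
class ArchitecturePrompt:
    """Hypothetical container for the six core elements (names are illustrative)."""

    objective: str                 # one sentence: what the system does
    features: list[str]            # phrased as user actions
    requirements: list[str]        # short, specific bullet points
    examples: list[str]            # concrete input -> output pairs
    success_conditions: list[str]  # what "done" looks like
    integrations: list[str]        # required stack and services

    def render(self) -> str:
        """Join the elements into one plain-text prompt, one labeled section each."""
        sections = [
            ("Features", self.features),
            ("Requirements", self.requirements),
            ("Examples", self.examples),
            ("Success conditions", self.success_conditions),
            ("Required integrations", self.integrations),
        ]
        lines = [f"Objective: {self.objective}"]
        for title, items in sections:
            lines.append("")
            lines.append(f"{title}:")
            lines.extend(f"- {item}" for item in items)
        return "\n".join(lines)


# Sample values are assumptions for illustration only.
prompt = ArchitecturePrompt(
    objective="A microservice that lets users sign up and log in.",
    features=["Users can log in with email and password and stay logged in across devices."],
    requirements=["Validate all inputs.", "Return JSON responses."],
    examples=["Input: an email and password -> Output: a token and the user record."],
    success_conditions=["All endpoint tests pass."],
    integrations=["Use PostgreSQL, not SQLite.", "Use bcrypt for passwords."],
)
print(prompt.render())
```

Because every field is required, leaving out an element fails when the object is constructed rather than after the AI has already guessed.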

Real Example: Bad Prompt vs Architecture-First Prompt

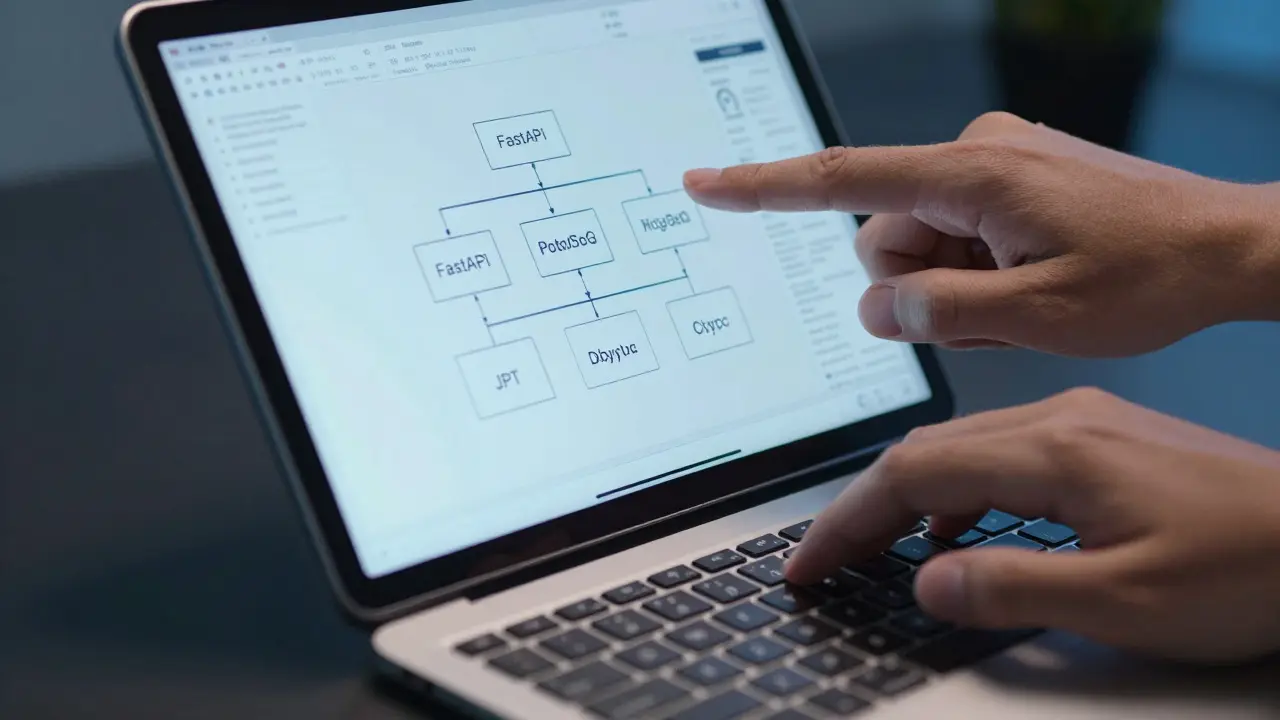

Bad prompt:

> "Build a user management system."

That’s it. No tech stack. No security rules. No tests. The AI will guess. And it’ll guess wrong.

Architecture-first prompt:

> "Build a user-management microservice with Python FastAPI and PostgreSQL. Use JWT for authentication. Hash passwords with bcrypt. Validate all inputs to prevent SQL injection. Return JSON responses with CORS enabled for https://app.yourcompany.com. Include unit tests for every endpoint using pytest. Output must include these exact files: app.py, models.py, schemas.py, services.py, and tests/test_users.py. The system must handle 100 concurrent sign-ups without slowing down."

The difference isn’t just detail; it’s control. The AI now knows what success looks like. And it’s far more likely to deliver it.

The Four Layers of Quality Architecture Prompts

Seroter’s quality-focused prompt framework breaks down what makes architecture-first prompts so effective. Think of them as four layers of a building:

- Blueprint & Foundation: Code structure, modularity, naming conventions, folder layout. This is where maintainability lives. If the code is a mess, no one can extend it.

- Walls & Locks: Security, meaning authentication, input validation, encryption, rate limiting. This layer stops breaches before they happen.

- Engine & Plumbing: Performance, logging, monitoring, caching. If the system is slow or crashes silently, users leave.

- Assembly Line: Deployment, CI/CD, environment variables, containerization. This ensures the code goes from your laptop to production without breaking.
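The four layers can double as a pre-flight checklist for a draft prompt. The sketch below is a rough keyword heuristic, not part of Seroter’s framework: the `LAYER_KEYWORDS` mapping and the `missing_layers` helper are hypothetical, and a simple substring match will miss synonyms and produce false positives.

```python
# Hypothetical keyword hints per layer; tune these to your own vocabulary.
LAYER_KEYWORDS = {
    "Blueprint & Foundation": ("structure", "module", "naming", "folder", "files"),
    "Walls & Locks": ("auth", "validat", "encrypt", "rate limit", "hash"),
    "Engine & Plumbing": ("performance", "logging", "monitoring", "cach", "concurrent"),
    "Assembly Line": ("deploy", "ci/cd", "environment variable", "container", "docker"),
}


def missing_layers(prompt: str) -> list[str]:
    """Return the layers for which the prompt mentions none of the hint keywords."""
    text = prompt.lower()
    return [
        layer
        for layer, keywords in LAYER_KEYWORDS.items()
        if not any(keyword in text for keyword in keywords)
    ]


draft = (
    "Use JWT for authentication. Output these files: app.py, models.py. "
    "Handle 100 concurrent sign-ups."
)
print(missing_layers(draft))  # ['Assembly Line']
```

Here the draft covers structure, security, and performance but says nothing about deployment, so the check flags the fourth layer before the prompt is sent.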

Who’s Using This, and Why

This isn’t a fringe trend. Companies like Supabase, Vercel, and Rocket.new are using architecture-first prompting as their default workflow. Why? Because it saves time.

- Supabase’s Developer Advocate, Sunil Pai, compares it to cooking: "Asking for ‘food’ vs giving a chef specific ingredients and instructions."

- Jason Lengstorf at Vercel says: "Vibe coding without architectural guardrails is like building a house without blueprints: you might end up with something that stands, but it won’t be code you want to maintain."

- GitHub’s AI Research Team found that prompts with explicit architecture had 78% higher accuracy in generating correct API implementations.

On the ground, developers report real wins:

- u/DevWithAI on Reddit said architecture-first prompts cut onboarding time for new team members by 55%. "The prompts serve as living documentation."

- KhazP’s GitHub repo, starred over 2,300 times, shows teams using these templates to keep code consistent across 10+ developers.

Common Problems, and How to Fix Them

Even the best templates fail if used wrong. Here are the top three issues and how to solve them:

- AI ignores attached documents (38% of cases)

Fix: Start your prompt with: "First, read all attached documents. Confirm you understand them. Then proceed."

- Code doesn’t match requirements (29% of cases)

Fix: Add: "Double-check every requirement in the bullet list above. If any are missing, list them and explain why you didn’t implement them."

- AI overcomplicates the solution (22% of cases)

Fix: Add: "Use the simplest approach that satisfies all requirements. Avoid adding features not listed."
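The three fixes above are fixed sentences, so they can be attached to any prompt mechanically instead of being retyped. This is a minimal sketch under that assumption: the `GUARDRAILS` dictionary and the `with_guardrails` helper are hypothetical names, and the wording is copied from the fixes listed above.

```python
# Guardrail sentences quoted from the three fixes; the keys are illustrative.
GUARDRAILS = {
    "read_attachments": (
        "First, read all attached documents. Confirm you understand them. Then proceed."
    ),
    "check_requirements": (
        "Double-check every requirement in the bullet list above. If any are missing, "
        "list them and explain why you didn't implement them."
    ),
    "keep_simple": (
        "Use the simplest approach that satisfies all requirements. "
        "Avoid adding features not listed."
    ),
}


def with_guardrails(prompt: str, has_attachments: bool = False) -> str:
    """Wrap a prompt with the guardrail sentences, separated by blank lines."""
    parts = []
    if has_attachments:
        # The attachment instruction goes first, as the fix recommends.
        parts.append(GUARDRAILS["read_attachments"])
    parts.append(prompt.strip())
    parts.append(GUARDRAILS["check_requirements"])
    parts.append(GUARDRAILS["keep_simple"])
    return "\n\n".join(parts)


print(with_guardrails("Build a user-management microservice.", has_attachments=True))
```

The attachment line is only added when documents are actually attached, since asking the AI to confirm documents that do not exist adds noise.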

How to Start (Even If You’re New)

You don’t need to be an expert to use this. Here’s a progression:

- Beginner: "I’m new to coding. Read NOTES.md and guide me step-by-step to build this MVP. Explain what you’re doing."

- Intermediate: "Read NOTES.md and the docs folder. Build the core features first, test them, then add polish."

- Advanced: "Review NOTES.md and the architecture diagram. Implement Phase 1 using our team’s patterns. Include full test coverage."

The Limits: When Architecture-First Fails

This method isn’t magic. It doesn’t work well for:

- Complex algorithm design (e.g., optimizing a machine learning model)

- Highly exploratory projects where the architecture isn’t known yet

The Future: What’s Next

This isn’t the end; it’s the beginning. Here’s what’s coming:

- Vibe Units (Rocket.new, Jan 2026): Auto-generates architecture templates based on project type. Saves 65% of setup time.

- Architecture Guardrails (Supabase, Jan 2026): Real-time validation of AI-generated code against your specs.

- CI/CD Integration: Automated tests that check if AI-generated code meets architectural rules before merging.

- AI-Suggested Architecture: Meta AI’s prototype can now recommend optimal architecture based on your project description.
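The CI/CD item above can start very small. The sketch below shows one possible pre-merge check, assuming the architectural rule is simply "these files must exist": the `missing_files` helper is hypothetical, and the file list is taken from the example prompt earlier in this article. It is not a description of any vendor’s tooling.

```python
import tempfile
from pathlib import Path

# File list taken from the example architecture-first prompt in this article.
REQUIRED_FILES = (
    "app.py",
    "models.py",
    "schemas.py",
    "services.py",
    "tests/test_users.py",
)


def missing_files(project_root: Path, required: tuple[str, ...] = REQUIRED_FILES) -> list[str]:
    """Return the required relative paths that do not exist under project_root."""
    return [rel for rel in required if not (project_root / rel).is_file()]


# Demo against a throwaway directory that lacks services.py.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "tests").mkdir()
    for rel in ("app.py", "models.py", "schemas.py", "tests/test_users.py"):
        (root / rel).write_text("")
    print(missing_files(root))  # ['services.py']
```

In a pipeline, a test could assert that `missing_files(Path("."))` returns an empty list and fail the merge otherwise. File presence is only the weakest architectural rule, but it catches the case where the AI silently merges everything into one module.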

Final Tip: Treat Prompts Like Code

Store your best architecture-first templates in version control. Share them in your team’s repo. Review them weekly. Update them as your stack evolves. The best developers aren’t the ones who write the most code. They’re the ones who write the best prompts.

What’s the difference between a regular prompt and an architecture-first prompt?

A regular prompt focuses on features: "Build a login page." An architecture-first prompt adds structure: "Build a login microservice using FastAPI and PostgreSQL. Use bcrypt for passwords, JWT for auth, return JSON with CORS enabled, include pytest tests, and output these five files: app.py, models.py, schemas.py, services.py, and tests/test_auth.py."

Do I need to use a specific AI tool for architecture-first prompting?

No. You can use GitHub Copilot, Claude, Gemini, or any AI coding assistant. The method works because of how you write the prompt-not the tool. But platforms like Rocket.new and Vercel’s v0 now bake these templates into their interfaces, making it easier to get started.

Can architecture-first prompting replace technical documentation?

Not entirely, but it comes close. Architecture-first prompts act as living documentation. When a new developer joins, they can read the prompt and understand the system’s structure, tech stack, security rules, and expectations, all in one place. Many teams now treat the prompt as the primary source of truth for new features.

How do I avoid over-constraining the AI?

Start with the essentials: tech stack, security rules, output files, and success criteria. Avoid dictating implementation details like variable names or exact function logic unless necessary. Let the AI choose the cleanest way to solve the problem within your guardrails. If the AI suggests a better approach, consider it.

Is this only for backend development?

No. It works for frontend, mobile, and even infrastructure. For React, specify: "Use TypeScript, Tailwind CSS, and React Router. Implement dark mode toggle. All components must be unit tested with Jest. Output: App.tsx, Login.tsx, AuthContext.tsx, and tests/Login.test.tsx."

Reshma Jose

January 25, 2026 AT 20:34

This changed how I work with Copilot completely. Before, I was spending hours fixing garbage code. Now I just drop in my template and boom - clean, testable, deployable stuff. No more begging the AI to understand my team’s style. It just gets it.

Even my junior devs are shipping better code now. The prompt is basically our living docs. No more ‘what’s the architecture again?’ meetings. Just read the prompt. Done.

rahul shrimali

January 26, 2026 AT 04:29

Stop overcomplicating it. Just tell the AI what to build and what not to do. No fluff. No essays. I use one line for stack, one for security, one for output files. Works every time. Less is more.

Eka Prabha

January 26, 2026 AT 07:34

Let me guess - this is the same ‘AI will replace engineers’ nonsense dressed up as productivity. You’re handing over your brain to a statistical parrot and calling it ‘architecture’. What happens when the AI hallucinates a backdoor because you didn’t specify ‘don’t use eval’? Or when your CI/CD pipeline breaks because it generated a Dockerfile with root access?

These templates are just a crutch for teams that can’t write code themselves. And now you’re treating prompts like sacred scripture? Next thing you know, we’ll be auditing Git commits for ‘architectural purity’. This isn’t innovation - it’s surrender.

deepak srinivasa

January 27, 2026 AT 09:06

Interesting. I’ve been trying this with Claude for frontend stuff - React + TypeScript + Tailwind. But I keep running into this: the AI sometimes ignores the ‘aesthetic’ part. Like it’ll make everything super minimal but then add 12 unused hooks because it thinks ‘developer-friendly’ means ‘more options’. Any tips on how to make it stick to the vibe without over-engineering?

pk Pk

January 28, 2026 AT 03:50

Guys, if you’re still writing prompts like ‘build a login’ - you’re leaving money on the table. This isn’t just about code quality, it’s about team velocity. I trained my whole squad on this in a 90-minute workshop. We went from 5 iterations per feature to 1.5. The morale boost? Massive.

Start small. Pick one service. Write the template. Use it. Then share it. Don’t wait to be perfect. Just start. Your future self will thank you.

NIKHIL TRIPATHI

January 29, 2026 AT 14:12

I’ve been using this for 6 months now across 3 projects - backend, mobile, and even a CLI tool for internal ops. The biggest win? Onboarding. New hires don’t need a 3-day code walkthrough. They read the prompt, see the structure, the security rules, the file layout - and they’re productive by lunch.

Also, we started versioning our templates in /prompts/ in our repo. We review them every sprint. One guy added ‘use zod for validation’ and it cut input bugs by 70%.

But here’s the thing - don’t treat this like a rigid formula. The AI still surprises you. Last week it suggested using Redis for session caching instead of JWT storage. We hadn’t thought of that. We tried it. It worked better. So now it’s in our template.

It’s not about controlling every detail. It’s about giving the AI the right context so it can be creative within your guardrails. That’s the sweet spot.

And yeah, I agree with Eka - if you’re over-constraining variable names or function logic, you’re killing the AI’s ability to optimize. I learned that the hard way when I made it generate a whole ORM layer because I said ‘use SQLAlchemy’. It did. But it was overkill. Now I just say ‘use an ORM’ and let it pick the lightest one that fits.