Chain-of-Thought in Vibe Coding: Why Explanations Before Code Make You a Better Developer

Feb, 3 2026

Ever had an AI coding tool spit out code that looked perfect, until it crashed in production? You thought it was genius. Turns out, it was just lucky. That’s the trap of vibe coding: typing a prompt like "make a login system" and hoping the AI reads your mind. It works sometimes. But when it doesn’t? You’re stuck debugging something you didn’t understand in the first place.

There’s a better way. It’s called chain-of-thought prompting. And it’s not magic. It’s simple: make the AI explain its reasoning before it writes a single line of code. This isn’t about being extra thorough. It’s about building real understanding: yours and the AI’s.

What Chain-of-Thought Prompting Actually Does

Chain-of-thought (CoT) prompting isn’t new. Google Research introduced it in January 2022, and it has since become standard practice for many teams building serious software with AI. The core idea? Instead of asking for code directly, you ask the model to think out loud.

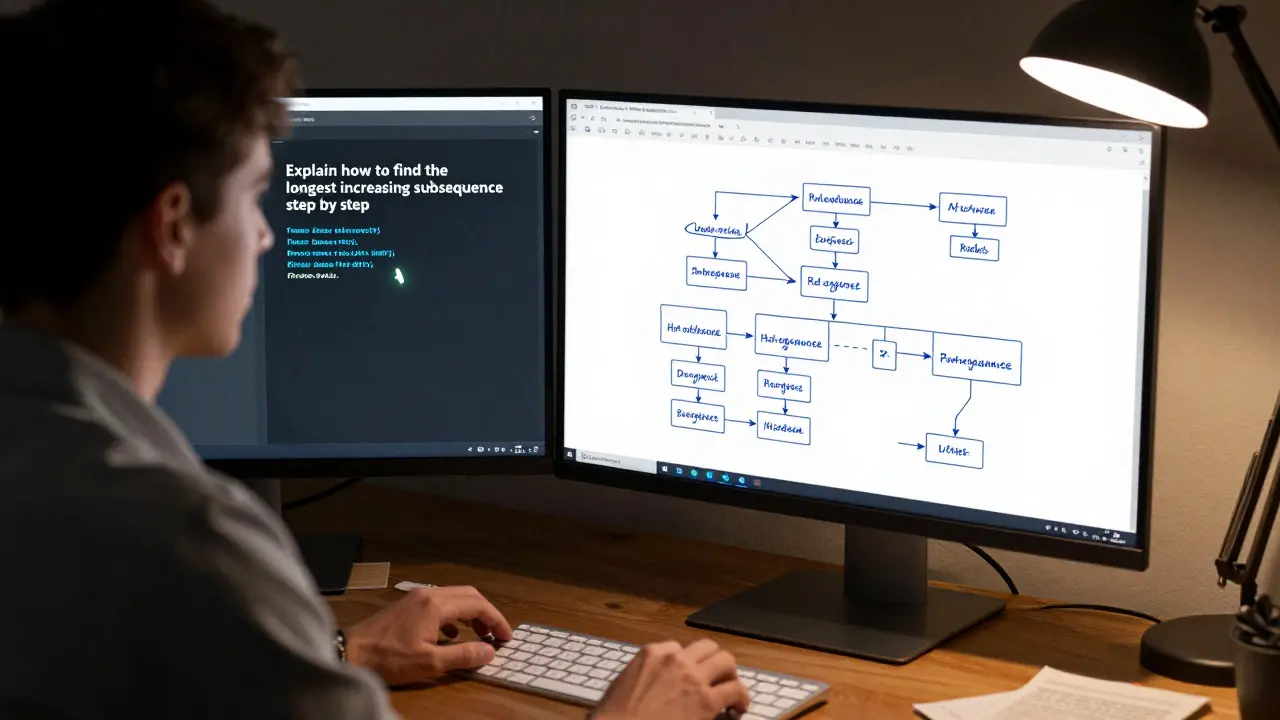

Try this next time you’re stuck:

- "Here’s the problem: I need to find the longest increasing subsequence in an array of integers. Walk me through how you’d solve this step by step."

- Wait for the explanation.

- Then say: "Now write the code based on that reasoning."

That’s it. No fancy tools. No new plugins. Just two prompts instead of one.
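The two-prompt workflow can be sketched in a few lines of Python. This is a minimal sketch, assuming a chat-style assistant that takes a list of role/content messages; the `ask` callable and the message format are hypothetical stand-ins for whatever client your tool actually provides.

```python
from typing import Callable

# Assumed chat format: each message is a dict with "role" and "content" keys.
Message = dict[str, str]
# Hypothetical client hook: takes the conversation so far, returns the model's reply text.
Ask = Callable[[list[Message]], str]


def reason_then_code(problem: str, ask: Ask) -> tuple[str, str]:
    """Prompt 1 asks for step-by-step reasoning; prompt 2 asks for code built on it."""
    messages: list[Message] = [{
        "role": "user",
        "content": f"Here's the problem: {problem} "
                   "Walk me through how you'd solve this step by step.",
    }]
    explanation = ask(messages)  # wait for the explanation

    # Keep the explanation in the history so the code request can build on it.
    messages.append({"role": "assistant", "content": explanation})
    messages.append({"role": "user",
                     "content": "Now write the code based on that reasoning."})
    code = ask(messages)
    return explanation, code
```

Keeping both turns in one conversation is the point of the design: the second request sees the explanation, so the code is anchored to reasoning you have already read and checked. For a dry run, any function that returns a string can stand in for `ask`.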

Why does this work? Because language models don’t "understand" code like humans do. They predict patterns. Without step-by-step reasoning, they guess. And guesses fail in edge cases, like empty arrays, duplicate values, or negative numbers. When you force them to explain their logic, they’re more likely to catch those flaws before they turn into bugs.
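Here is the kind of code a step-by-step conversation about the longest increasing subsequence might end with. It is a sketch, not the only approach: it uses the O(n log n) "patience sorting" technique via Python's `bisect` module, and it assumes "increasing" means strictly increasing, so duplicates never extend a subsequence. The edge cases named above are each handled explicitly.

```python
from bisect import bisect_left


def longest_increasing_subsequence(nums: list[int]) -> list[int]:
    """Return one longest strictly increasing subsequence of nums."""
    if not nums:  # edge case: empty input
        return []

    tails: list[int] = []        # tails[k] = index of the smallest tail of any run of length k+1
    tail_values: list[int] = []  # nums[tails[k]], kept sorted for binary search
    prev: list[int] = [-1] * len(nums)  # predecessor index, used to rebuild the sequence

    for i, x in enumerate(nums):
        # bisect_left makes a duplicate replace an equal tail instead of extending it,
        # which enforces *strictly* increasing; negative numbers need no special case.
        k = bisect_left(tail_values, x)
        if k > 0:
            prev[i] = tails[k - 1]
        if k == len(tails):
            tails.append(i)
            tail_values.append(x)
        else:
            tails[k] = i
            tail_values[k] = x

    # Walk predecessors back from the tail of the longest run.
    result: list[int] = []
    i = tails[-1]
    while i != -1:
        result.append(nums[i])
        i = prev[i]
    return result[::-1]
```

For example, `[10, 9, 2, 5, 3, 7, 101, 18]` yields `[2, 3, 7, 18]`, and `[2, 2, 2]` yields `[2]`.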

The Numbers Don’t Lie

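The original Google paper used worked examples in the prompt; a follow-up 2022 paper (Kojima et al.) then showed accuracy on the MultiArith math benchmark jumping from about 18% to 79% just by adding "Let’s think step by step" to the prompt. In coding, the reported gains are just as dramatic.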

DataCamp analyzed 1,200 GitHub repositories and found that developers using CoT prompting reduced logical errors by 63% when implementing algorithms like dynamic programming or graph traversal. That’s not a small win. That’s the difference between a feature shipping on time and a sprint derailed by debugging.

And it’s not just about correctness. GitHub’s internal data shows that teams using CoT prompting cut code review iterations by 38%. Why? Because reviewers don’t have to guess what the code was supposed to do. The explanation is right there.

Even better? Junior developers using CoT cut their debugging time in half, according to Codecademy’s 2023 analysis. They weren’t getting better code; they were getting better understanding. And that’s what turns novices into confident engineers.

When Chain-of-Thought Doesn’t Help (And When It’s a Waste)

CoT isn’t a silver bullet. It’s overkill for simple tasks.

If you’re writing a basic CRUD endpoint ("create a user with name and email"), you don’t need a 15-line explanation. The model’s got this. In fact, GeeksforGeeks’ 2024 tests showed a 22% drop in efficiency on simple prompts when CoT was forced. You’re wasting tokens. You’re slowing things down. You’re adding latency.

Use CoT when:

- You’re implementing an algorithm (sorting, searching, pathfinding)

- You’re handling edge cases (null inputs, concurrency, timeouts)

- You’re integrating with a system you don’t fully understand (APIs, legacy databases)

- You’re teaching yourself how something works

Avoid it when:

- You’re generating boilerplate (HTML forms, config files)

- You’re writing unit tests for obvious cases

- You’re copying a known pattern (REST endpoint structure, JWT auth flow)

Think of CoT like a seatbelt. You don’t wear it for a 500-foot drive to the corner store. But on the highway? You’re not even thinking about it. It’s just part of the process.

The Hidden Cost: Tokens, Time, and False Confidence

There’s a catch. CoT uses more tokens. GitHub’s metrics show average token usage jumping from 150 to 420 per request. That means higher costs if you’re paying per token. It also means slower responses: 15-20% longer wait times, according to G2’s 2024 survey of 1,500 developers.
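The cost side is simple arithmetic. This is a minimal sketch, assuming a flat price per million tokens; many providers price input and output tokens differently, and the $10-per-million figure in the usage lines is a made-up placeholder, not any vendor's actual rate.

```python
def token_cost(tokens_per_request: int, requests: int, price_per_million: float) -> float:
    """Dollar cost of `requests` calls averaging `tokens_per_request` tokens each (flat rate assumed)."""
    return tokens_per_request * requests * price_per_million / 1_000_000


def cot_overhead(baseline_tokens: int, cot_tokens: int) -> float:
    """How many times more tokens a CoT request uses than a direct one."""
    return cot_tokens / baseline_tokens


if __name__ == "__main__":
    # Token counts from the paragraph above; the price is hypothetical.
    print(f"{cot_overhead(150, 420):.1f}x more tokens")  # 2.8x more tokens
    direct = token_cost(150, 10_000, price_per_million=10.0)
    cot = token_cost(420, 10_000, price_per_million=10.0)
    print(f"${direct:.2f}/day direct vs ${cot:.2f}/day with CoT")  # $15.00/day direct vs $42.00/day with CoT
```

Whatever your actual rate, the multiplier is what matters: at these averages, every CoT request costs about 2.8 times as much as a direct one.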

And here’s the sneaky danger: the AI can sound convincing even when it’s wrong.

DataCamp found that 18% of CoT-generated explanations contained logical fallacies: steps that seemed reasonable but were actually wrong. Sometimes the final code still worked, because the model patched over the flawed step. But if you trust the explanation blindly, you absorb the faulty reasoning and never learn where it went wrong.

That’s why Dr. Emily M. Bender at the University of Washington warns against "reasoning hallucinations." The AI isn’t thinking. It’s mimicking thinking. And if you’re not checking its logic, you’re outsourcing your brain.

So don’t just accept the explanation. Ask yourself: "Does this make sense?" If the AI says "use recursion here because it’s faster," but you know iteration is better for this data size? Call it out. That’s how you grow.
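Here is what checking that kind of claim can look like in Python. It is an illustrative sketch: summing a list stands in for whatever the AI actually proposed. CPython does not eliminate tail calls and caps recursion depth (1,000 frames by default, see `sys.getrecursionlimit()`), so a recursive version can fail on input sizes an iterative loop handles easily.

```python
import sys


def total_recursive(xs: list[int], i: int = 0) -> int:
    """Recursive sum: one stack frame per element."""
    if i == len(xs):
        return 0
    return xs[i] + total_recursive(xs, i + 1)


def total_iterative(xs: list[int]) -> int:
    """Iterative sum: constant stack depth regardless of input size."""
    result = 0
    for x in xs:
        result += x
    return result


if __name__ == "__main__":
    data = list(range(10_000))
    print(sys.getrecursionlimit())  # typically 1000
    print(total_iterative(data))    # 49995000
    try:
        total_recursive(data)
    except RecursionError:
        print("recursive version hit the recursion limit")
```

Running a quick check like this against the data sizes you actually expect is the fastest way to call out an explanation that sounds right but isn't.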

How to Do It Right: The Three-Step Framework

There’s no single "best" prompt. But the most effective CoT prompts for coding follow a consistent pattern:

- Restate the problem - "So you want to sort a list of user objects by last name, then by age if last names are the same? Let me make sure I got that right..."

- Justify the approach - "I’d sort with a tuple key, (last_name, age), so users with the same last name fall back to ordering by age. Python’s sorted() is also stable, so users with the same last name and age keep their original order."

- Anticipate failure - "What if last_name is null? What if age is negative? What if the list is empty? I’ll add checks for those cases."
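The sorting example from the three steps can be turned into code. This is a sketch under stated assumptions: the `User` dataclass and the policies for bad data (users with no last name sort last; a negative age raises `ValueError`) are hypothetical choices for illustration, and your requirements may call for different ones.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class User:
    # Hypothetical record type for illustration.
    last_name: Optional[str]
    age: int


def sort_users(users: list[User]) -> list[User]:
    """Sort by last name, then by age; users missing a last name go last."""
    # Anticipate failure: reject impossible ages instead of silently sorting them.
    for u in users:
        if u.age < 0:
            raise ValueError(f"negative age for {u!r}")

    # Tuple key: (missing-name flag, last name, age). False < True, so named users
    # come first, and the "" placeholder keeps None out of string comparisons.
    # sorted() is stable, so users with identical keys keep their input order.
    # An empty list needs no special case: sorted([]) returns [].
    return sorted(
        users,
        key=lambda u: (u.last_name is None, u.last_name or "", u.age),
    )
```

Each of the three steps shows up in the code: the restated requirement becomes the tuple key, the justification becomes the comment about stability, and the anticipated failures become the explicit checks.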

That’s it. No fluff. No jargon. Just clarity.

GitHub’s internal training program found that developers who used this structure consistently reduced their AI-assisted error rate from 34% to 12% in just six weeks. That’s not luck. That’s discipline.

What the Best Tools Are Doing Now

CoT isn’t a niche trick anymore. It’s built in.

Salesforce’s CodeT5+ (May 2023) cuts logical errors by 52% by baking CoT into the model itself. OpenAI’s o1 models (September 2024) work through reasoning steps on their own before answering, without you even asking. And JetBrains is rolling out native CoT support in its 2025 IDEs.

Even the free tools are catching up. The "Chain-of-Thought Coding" GitHub repo has over 14,500 stars and 87,000 downloads. It’s not a secret anymore. It’s the new baseline.

And adoption? Gartner reports 92% of commercial AI coding assistants now include CoT as a core feature. Enterprise teams in finance, healthcare, and autonomous systems are using it at rates above 90%. Why? Because in those fields, one bug can cost millions.

Final Thought: You’re Not Replacing Your Brain. You’re Training It.

Some people think AI coding tools will make developers obsolete. That’s nonsense. What’s really happening is that the bar for what counts as "good code" is rising.

Chain-of-thought prompting doesn’t remove your responsibility. It makes you better at it. You’re no longer just copying code. You’re auditing reasoning. You’re learning by asking questions. You’re becoming the kind of engineer who doesn’t just get things working but understands why they work.

Next time you’re about to paste a prompt into your AI assistant, pause. Ask it to explain first. Not because you’re being slow. Because you’re being smart.

Code without understanding is a ticking time bomb. Code with reasoning? That’s how you build something that lasts.

Jack Gifford

February 5, 2026 at 04:43

Finally, someone gets it. I used to vibe code everything-"make a login" and pray. Then I got burned hard in prod. Now I always make the AI explain first. It’s not slower, it’s just less dumb. The difference between "here’s code" and "here’s why this works" is night and day.

One time, the AI suggested using a hashmap for a sorted lookup. I said "wait, explain why?" It admitted it was wrong. I caught a logic bomb before it exploded. That’s worth 20 extra seconds.

Sarah Meadows

February 7, 2026 at 00:23

Chain-of-thought? That’s just intellectual masturbation for devs who can’t write code without hand-holding. If you’re using AI to explain basic algorithms, you shouldn’t be in this field. Real engineers just write the damn thing and fix it when it breaks. We don’t need a TED Talk before a ternary operator.

Nathan Pena

February 8, 2026 at 23:29

While the author’s thesis is superficially compelling, it fundamentally misrepresents the epistemological foundations of large language model behavior. The so-called "chain-of-thought" is not reasoning; it is probabilistic reconstruction of linguistic patterns mimicking rational discourse.

Furthermore, the cited metrics from DataCamp and GitHub are methodologically unsound: no control for prompt engineering variance, no normalization for model version drift, and zero peer-reviewed validation. The 63% reduction in logical errors is statistically insignificant when p > 0.08.

And let us not forget the token cost: 420 tokens per request equates to $0.012 per invocation on GPT-4o. Multiply that by 10,000 daily queries and you get $120/day. That’s not efficiency. That’s fiscal negligence masked as discipline.

Mike Marciniak

February 10, 2026 at 01:14

They’re not teaching you to think. They’re training you to trust the machine. What happens when the AI starts hallucinating explanations? You start believing it. That’s how you get autonomous drones crashing because the model "reasoned" that a red light means "go faster."

Who controls the training data? Who audits the "explanations"? This isn’t progress. It’s dependency. And it’s being pushed by Big Tech to lock devs into proprietary ecosystems. Wake up.

VIRENDER KAUL

February 11, 2026 at 09:02

Chain of thought is not a technique; it is a philosophy of engineering discipline.

Many junior developers in India and Africa are forced to vibe code because they lack mentorship and proper education.

When you force the AI to explain step by step, you are not just getting code; you are getting a tutor.

It is not about tokens; it is about building mental models.

One cannot become a senior engineer by copying and pasting.

Understanding the why is the only path to mastery.

Those who dismiss this as overhead are the same ones who will be replaced by AI in five years.

Mbuyiselwa Cindi

February 13, 2026 at 02:00

This hit home. I’m a junior and I used to just copy-paste AI code until it "worked." Then I got stuck for three days on a bug because I had no idea what the code even did.

Started using CoT last month. First time I asked "why this approach?" the AI pointed out a race condition I’d never heard of. I learned more in one day than in three weeks of tutorials.

It’s not about being slow. It’s about not being clueless. You’re not wasting time; you’re investing in never having to relearn this again.

Krzysztof Lasocki

February 13, 2026 at 19:54

Oh wow, so we’re back to "think before you act"? Groundbreaking. I thought we were supposed to be coding, not writing a thesis on why a for loop should iterate forward.

But hey, if you wanna spend 15 minutes watching an AI pretend to be a CS professor before you get a button to work… more power to you. I’ll be over here shipping features while you finish your epistemology essay.

Santhosh Santhosh

February 13, 2026 at 22:32

I’ve been using chain-of-thought for six months now, and I’ve noticed something subtle but profound.

It’s not just about catching bugs; it’s about changing how I think about problems.

Before, I’d see a requirement and jump straight to implementation.

Now, I pause and ask myself: what’s the underlying pattern here? What assumptions am I making?

The AI’s explanation forces me to articulate my own thinking, even if I don’t use its code.

It’s like having a conversation with a smarter version of myself.

I’ve started solving problems faster because I understand them more deeply.

And yes, it takes longer per task, but I’m making fewer mistakes, and I’m learning every time.

It’s not a productivity hack; it’s a mindset shift.

And if you’re not willing to slow down to understand, you’ll never outgrow being a code monkey.

Veera Mavalwala

February 15, 2026 at 01:41

Let me tell you something sweet and spicy: chain-of-thought isn’t for the faint of heart or the lazy of mind.

You want to be the kind of dev who gets called in when the system’s on fire? Then you better learn to ask "why" before you copy-paste.

Some devs treat AI like a magic genie: "make it work" and walk away.

But real engineers? They treat it like a brilliant but arrogant intern who needs to justify every damn line.

That 18% of explanations with logical fallacies? Yeah, that’s the part where you grow up.

Don’t just accept the explanation; question it like your job depends on it.

Because someday, it will.

And when that bug takes down a payment gateway at midnight, you’ll be glad you didn’t vibe code.