Open Source in the Vibe Coding Era: How Community Models Are Shaping AI-Powered Development

Oct, 11 2025

Oct, 11 2025

It’s 2025, and coding doesn’t feel like typing anymore. It feels like talking to a teammate who knows your style, remembers your last five files, and doesn’t need coffee to stay sharp. This is the vibe coding era-where AI doesn’t just suggest code, it anticipates it, adapts to your workflow, and even fixes bugs before you notice them. And at the heart of this shift? Open source.

Forget the old image of open source as a bunch of volunteers fixing bugs in their spare time. Today, it’s a living, breathing ecosystem of developers, companies, and communities building the next generation of coding assistants. Models like DeepSeek-R1, Qwen3-Coder-480B, and Kimi-Dev-72B aren’t just technical breakthroughs-they’re cultural ones. They’re designed not just to perform, but to feel right.

What Does "Vibe Coding" Actually Mean?

"Vibe coding" isn’t a marketing buzzword. It’s what developers say when they stop thinking about the model and start thinking about their code. It’s when the AI doesn’t interrupt your flow. When it gets your naming conventions. When it understands that you’re refactoring a legacy Java module, not writing a new React component. It’s the difference between an autocomplete tool and a collaborator.

That vibe comes from three things: context, consistency, and customization. Models now handle up to 256,000 tokens in a single prompt-that’s enough to read an entire codebase at once. They’re trained on real-world code, not just textbooks. And because they’re open source, you can tweak them to match your team’s style, your company’s security rules, or even your favorite linter settings.

One developer on Reddit switched from Claude 3.5 to Qwen3-Coder-480B because their internal tooling relied on a proprietary framework. "The 480B version just got it," they wrote. "It didn’t guess-it understood."

The Rise of Specialized Open-Source Coding Models

Early open-source LLMs tried to do everything. Llama 3? Fine for chat. Okay for code. But not great at either. Llama 4, released in April 2025, was supposed to change that. It didn’t. Enterprise users reported 32% more errors in complex SQL generation compared to GPT-4. The documentation? Patchy. The benchmarks? Overhyped.

Meanwhile, specialized models exploded. DeepSeek-R1 didn’t try to be general-purpose. It focused on reasoning. Its reinforcement learning with verifiers cut code errors by 18% compared to its predecessor. Kimi-Dev-72B? Built for long-form editing. Its 256K context lets you refactor an entire microservice in one go. Qwen3-Coder-480B? The first open-source model designed from the ground up for agentic coding-where the AI writes, tests, and deploys code without human hand-holding.

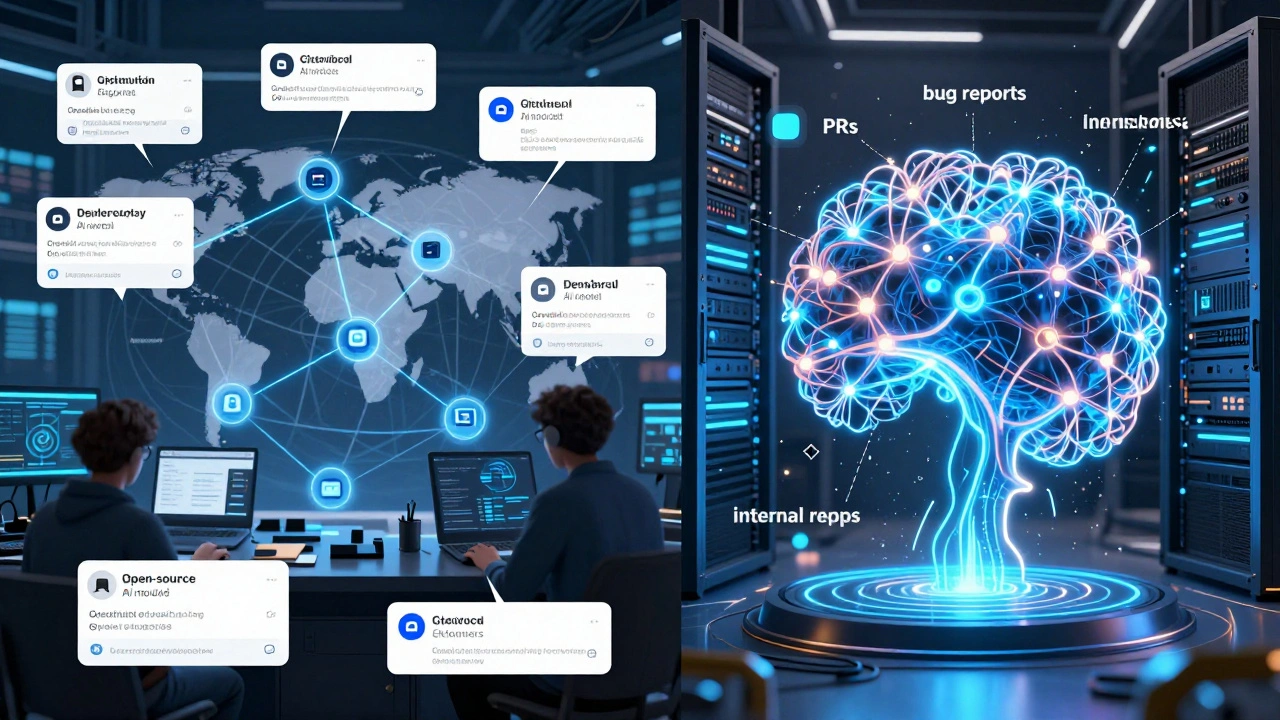

These aren’t just bigger models. They’re smarter architectures. They’re trained on different data: real PRs, internal repos, bug reports, commit messages. They don’t just generate code-they learn how teams work.

Why Open Source Still Wins on Customization

Here’s the truth: closed-source models like Claude 4 and GPT-4o are faster. They’re more consistent. They score higher on HumanEval benchmarks-sometimes by 15 percentage points. So why do 68% of developers still say customization is critical?

Because code isn’t just logic. It’s culture.

At a fintech startup in Berlin, the team needed their AI to follow strict compliance patterns for financial transactions. Closed-source models kept suggesting "fast but risky" optimizations. Open-source models? They were fine-tuned on their own internal codebase. The AI learned to reject any function that didn’t include audit logs. It started writing comments in German. It matched their code review checklist. That’s not a feature. That’s a necessity.

Open source lets you do that. You can quantize a 70B model to run on a single 24GB GPU. You can add your own dataset. You can lock it behind your firewall. You can even train it to avoid certain patterns-like using deprecated libraries or violating your company’s naming standards.

And the cost? No API bills. No surprise charges when your CI/CD pipeline runs 500 code generations an hour. For mid-sized companies (200-2,000 employees), that’s not a perk-it’s a lifeline.

The Community Is the New Engine

Open source didn’t get here by accident. It got here because communities showed up.

GitHub hosts over 1,200 fine-tuned coding models based on Llama 3. The top 10 variants outperform the base model by up to 18% on framework-specific tasks. One group in Tokyo fine-tuned a model to handle their legacy COBOL-to-Java migration. Another in São Paulo trained theirs to generate documentation in Portuguese. These aren’t corporate projects. They’re side hustles. Passion projects. Community-driven experiments that became production tools.

Meshery and LFX are now the new meetups. Meshery’s Discord server has over 12,000 developers sharing tips on deploying LLMs locally. LFX runs paid internships that pair new contributors with mentors who’ve fine-tuned models for healthcare apps or embedded systems. Documentation quality varies-Qwen3’s docs score 4.7/5 on GitHub. Llama 4’s? 3.2. But the community fills the gaps. Stack Overflow threads turn into GitHub issues. Reddit threads turn into pull requests.

This isn’t just collaboration. It’s collective intelligence. When one developer fixes a bug in a model’s Python code generation, thousands benefit. When someone adds support for a niche framework, it doesn’t stay hidden. It spreads.

The Real Trade-Offs: Performance vs. Control

Let’s be honest: open source isn’t always better. It’s just different.

Setting up a local LLM takes time. New developers report needing 1-2 weeks to get comfortable with Ollama, vLLM, or Hugging Face’s inference server. Quantizing a model to 4-bit saves memory but cuts accuracy by 8-12%. Some models handle Python beautifully but choke on Rust or SQL. One developer wrote: "I spent three days trying to get DeepSeek-R1 to generate valid Terraform. GPT-4 did it in one try."

And performance gaps still matter. In production, a 10% drop in code accuracy can mean dozens of bugs slipping into production. That’s why 66% of developers upgrade within their existing provider instead of switching vendors. Closed-source models are more reliable. They’re polished. They’ve got teams of engineers monitoring them 24/7.

But here’s the twist: open source doesn’t need to beat them. It just needs to be good enough-where it matters.

For internal documentation generators? Open source leads. For code review automation? 62% use open models. For legacy system modernization? Open source is the only option. For startups that can’t afford $50k/year in API fees? Open source is the only choice.

What’s Next: The Hybrid Future

The future isn’t open vs. closed. It’s hybrid.

Companies aren’t choosing one. They’re layering them. Use a closed-source model for complex logic, high-stakes queries, or customer-facing features. Use an open-source model for internal tooling, repetitive tasks, or domain-specific workflows.

Some teams run both. They feed the output of a closed-source model into an open-source one to check for compliance. Others use ensemble methods-running three open-source models in parallel and picking the most consistent answer. That’s brought the performance gap down from 22% to 15% in just six months.

Regulation is helping too. The EU AI Act’s May 2025 rules require transparency in training data. Open-source models win here-they publish their data sources, their training methods, their biases. Closed-source models? They don’t. That’s not just ethical. It’s becoming a legal advantage.

By 2027, open source will hold 25-30% of the coding AI market-not because it’s the best, but because it’s the only one that lets you own your tools. In healthcare, finance, defense, and embedded systems, that ownership isn’t optional. It’s mandatory.

How to Get Started Today

If you’re curious, here’s how to dip your toes in:

- Start small. Try Qwen3-Coder-7B on your laptop with Ollama. It runs on a MacBook with 16GB RAM.

- Use it for one task: generate unit tests for your existing code.

- Compare its output to Claude 3.5 or GPT-4. Notice the differences in style, not just accuracy.

- If it feels right, fine-tune it on your team’s codebase. Use Cake AI’s toolkit to simplify the process.

- Join Meshery’s Discord. Ask questions. Share your results.

You don’t need to be a machine learning engineer. You just need to care about how your code feels when it’s written.

Final Thought: The Vibe Is the Product

Open source in the vibe coding era isn’t about raw performance. It’s about trust. It’s about control. It’s about building tools that don’t just work-but feel like they were made for you.

That’s why, even as closed-source models get smarter, open source isn’t fading. It’s evolving. Not into a rival-but into a partner. A community. A shared space where developers don’t just use AI. They shape it.

And that’s the vibe that won’t go away.

Ian Maggs

December 9, 2025 AT 11:09It’s not just about code-it’s about the rhythm, the cadence, the unspoken agreement between developer and machine… You know? The way it knows you don’t want parentheses around single-line returns, or that you’ll never, ever use ‘var’ in JavaScript, even if the linter begs you… It’s not AI. It’s a mirror. And open source? It’s the only mirror that lets you grind your own glass.

saravana kumar

December 11, 2025 AT 01:46Let’s be brutally honest: 90% of these ‘vibe coding’ claims are marketing fluff wrapped in GitHub READMEs. DeepSeek-R1? Fine for toy projects. Try running it on a 2018 MacBook Air with 8GB RAM and see how ‘vibe’ you feel when it takes 14 minutes to generate a single function. GPT-4o still wins on raw reliability. Open source isn’t better-it’s just cheaper. And cheaper doesn’t mean better.

Tamil selvan

December 12, 2025 AT 12:58I appreciate the nuance in this post, and I’d like to gently add that the real triumph of open source isn’t in the models themselves-but in the dignity it restores to developers. When you can audit every token, tweak every hyperparameter, and know that no corporate policy will silently disable your favorite lint rule-you’re not just coding. You’re reclaiming agency. This isn’t a tool. It’s a right.

Mark Brantner

December 14, 2025 AT 12:29so i tried qwen3 on my pc… it made a function called ‘doTheThing()’ and then commented it ‘this does the thing’… i cried. not because it was bad… because it was… so… right? like it knew i’d been coding since 2012 and just wanted to get home. also i typoed ‘qwen’ as ‘qwenn’ and it still worked. magic? or just hype? i dont know but i’m hooked.

Kate Tran

December 15, 2025 AT 04:13My team switched to Qwen3-Coder-7B last month. We use it for docstrings and test stubs. It writes in British English now. I didn’t even tell it to. It just… picked up on our Slack tone. Weird? Maybe. But also kind of beautiful. I’m not ready to call it AI. I call it my quiet coworker who never steals the last biscuit.

amber hopman

December 15, 2025 AT 07:07I’ve been using DeepSeek-R1 for internal compliance checks. It’s not perfect, but it’s consistent-unlike GPT-4, which keeps suggesting ‘async/await’ in legacy Java services. We fine-tuned it on our audit logs and now it flags anything without a traceable ID. It’s not flashy, but it’s saved us 30 hours a week. Open source isn’t about being the fastest-it’s about being the most trustworthy. And that’s worth more than any API bill.

Jim Sonntag

December 16, 2025 AT 23:02Open source is the only reason I still have a job. Closed models? They’re like corporate interns-polite, polished, and utterly useless when you need them to understand your grandfather’s COBOL app. Meanwhile, some guy in Mumbai trained a model to auto-generate Jira tickets from his commit messages. No one paid him. He did it because he was bored. That’s the vibe. That’s the culture. And yeah, it’s messy. But it’s alive.

Deepak Sungra

December 18, 2025 AT 06:27Ok but let’s be real-this whole ‘vibe coding’ thing is just a fancy way of saying ‘my AI doesn’t make me want to quit coding.’ I’ve seen people cry because their GPT-4 kept suggesting ‘let’ instead of ‘const’ in React. Meanwhile, someone on Reddit fine-tuned a model to scream ‘NOPE’ in the terminal when it detects a deprecated API. That’s not engineering. That’s art. And I’m here for it. Also, I just spent 3 hours arguing with a model that refused to write SQL in snake_case. It’s a war. And I’m winning.