Reducing Hallucinations in Large Language Models: A Practical Guide for 2026

Jan, 26 2026

Large language models (LLMs) are powerful, but they lie. Not out of malice, but because they’re trained to sound convincing, not to be correct. You ask for the capital of Peru, and it confidently says "Bogotá." You ask for a patient’s medication dosage, and it invents a drug that doesn’t exist. These aren’t typos. They’re hallucinations: confident, fluent, and completely wrong. And they’re holding back real-world use of AI. A January 2024 study found that 78% of AI teams working in enterprises say hallucinations are their biggest barrier to deployment. That’s not a bug. It’s the system working as designed: the model doesn’t know what’s true, only which patterns look likely. And sometimes, that’s deadly. The good news? You can fix this. Not perfectly, but well enough to make LLMs safe for customer service, medical advice, legal research, and financial reporting. Here’s how.

What Exactly Is an LLM Hallucination?

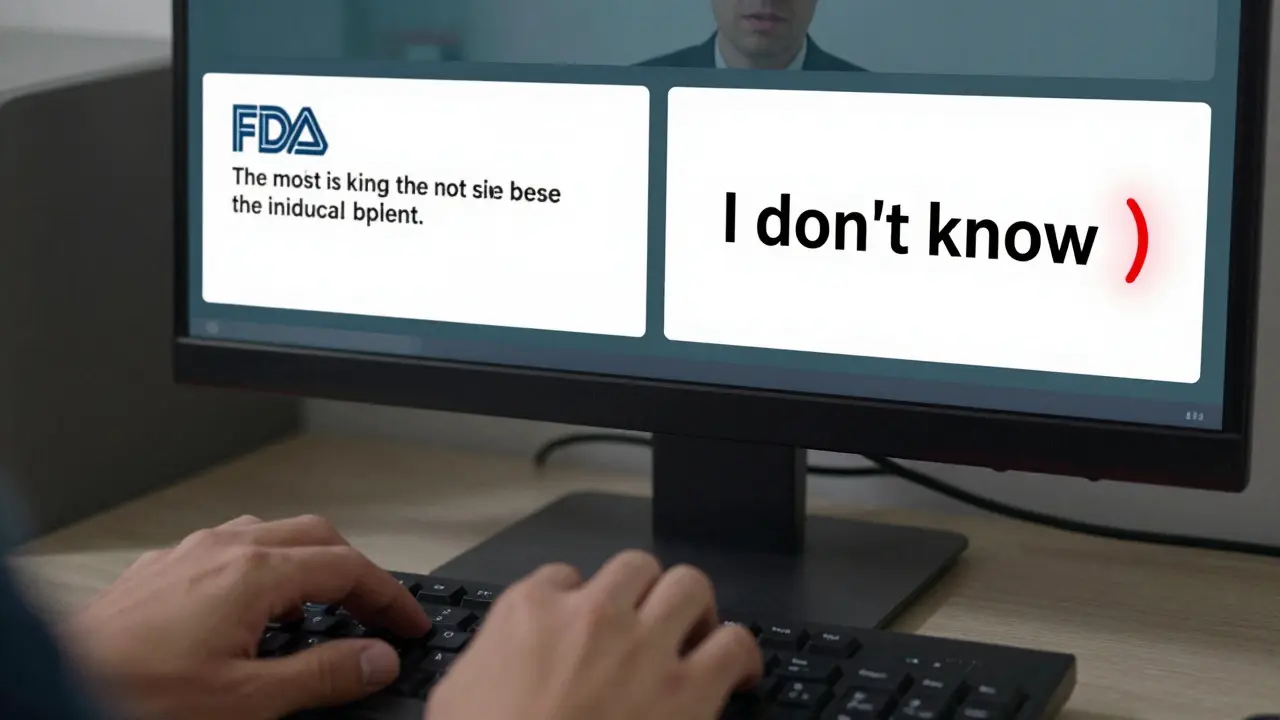

A hallucination isn’t just a mistake. It’s when the model generates something that sounds plausible, feels authoritative, and is entirely made up. It doesn’t say "I’m not sure." It says "The FDA approved X drug in 2021," when no such approval ever happened. Researchers break hallucinations into three types:

- Factual: Wrong dates, names, numbers, or events. "The Eiffel Tower was built in 1920."

- Logical: Contradictions or impossible reasoning. "John is taller than Mary. Mary is taller than Tom. So John is shorter than Tom."

- Instructional: Ignoring your prompt. You asked for a summary; it wrote a poem.

Use Prompt Engineering to Stop Hallucinations Before They Start

The easiest fix? Change how you ask. Most people treat prompts like casual questions. That’s like asking a surgeon to guess your diagnosis. You need precision. Here’s what works:

- Lower the temperature. Set it between 0.2 and 0.5. Higher values (0.8+) make outputs creative but unpredictable. Lower values make them steadier and more predictable. Tests show this cuts hallucinations by 32-45%.

- Use the ICE method. Microsoft’s team found this structure reduces hallucinations by 37%:

  - Instructions: Start with clear rules. "Answer only using the provided context."

  - Constraints: Add limits. "Do not invent facts. If unsure, say 'I don't know'."

  - Escalation: Tell it what to do when it can’t answer. "If the context doesn’t contain the answer, respond with: 'I cannot answer this based on available information.'"

- Repeat key instructions. Saying "Do not make up facts" once often isn’t enough. Say it two or three times. Microsoft found this improves effectiveness by 15%.

- Use chain-of-thought prompting. Ask the model to think step-by-step. "Explain your reasoning before answering." This reduces hallucinations by 28% on models like Mistral-7B.

- Give examples. Show it what a good answer looks like. "Here’s a correct response: [example]. Now answer this question the same way." Few-shot examples cut hallucinations by 22%.
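The temperature and prompt-structure tips above can be sketched in plain Python. This is a minimal illustration, not Microsoft’s implementation: `softmax_with_temperature` shows how temperature reshapes a model’s next-token distribution, and `build_ice_prompt` is a hypothetical helper that assembles an ICE-style prompt with a repeated constraint, optional few-shot examples, and a step-by-step instruction. The exact wording and the `repeats` parameter are assumptions; you would send the resulting string to whatever model API you use, with a low temperature such as 0.3.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw next-token scores into sampling probabilities.

    Dividing by a temperature below 1 sharpens the distribution so the
    top-scoring token dominates; a temperature above 1 flattens it.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max before exp() for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def build_ice_prompt(context, question, examples=(), repeats=2):
    """Hypothetical helper: assemble an Instructions / Constraints / Escalation prompt."""
    constraint = "Do not invent facts. If unsure, say 'I don't know'."
    parts = [
        "Instructions: Answer only using the provided context.",
        f"Constraints: {constraint}",
        "Escalation: If the context doesn't contain the answer, respond with: "
        "'I cannot answer this based on available information.'",
    ]
    # Optional few-shot examples showing what a good answer looks like.
    for example_question, example_answer in examples:
        parts.append(f"Example question: {example_question}\nExample answer: {example_answer}")
    parts.append(f"Context:\n{context}")
    parts.append(f"Question: {question}")
    # Restate the key constraint so it appears `repeats` times in total.
    parts.extend(f"Reminder: {constraint}" for _ in range(repeats - 1))
    parts.append("Explain your reasoning step by step, then give your final answer.")
    return "\n\n".join(parts)


if __name__ == "__main__":
    logits = [2.0, 1.0, 0.5]
    print(softmax_with_temperature(logits, 1.0))  # flatter: top token about 0.63
    print(softmax_with_temperature(logits, 0.3))  # sharper: top token about 0.96
    print(build_ice_prompt("Lima is the capital of Peru.", "What is the capital of Peru?"))
```

Lowering temperature only changes how the model samples from what it already believes; it makes answers more consistent, which is why it pairs well with a prompt that restricts the model to supplied context.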

Retrieval-Augmented Generation (RAG) Is the Gold Standard

RAG is the most effective method for reducing hallucinations. Instead of relying on the model’s memory, it gives the model facts to work with. Here’s how it works:

- You ask a question.

- The system searches a trusted source, such as your company’s product docs, medical journals, or legal statutes.

- It pulls the top 3-5 most relevant passages.

- The LLM uses only those passages to answer.

To keep RAG reliable:

- Clean your data. Remove duplicates, outdated info, and jargon.

- Organize by topic. Don’t dump everything into one bucket. Separate "FDA regulations," "drug interactions," "billing codes."

- Use RAGAS to measure quality. This open-source framework scores whether answers are supported by the retrieved text (faithfulness) and whether they actually address the question (answer relevancy). It correlates 0.87 with human judgment.

- Update sources weekly. Outdated info causes new hallucinations.
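The retrieve-then-answer flow described above can be sketched without any external services. This is a toy, assuming a small in-memory dictionary of already-cleaned passages: keyword overlap stands in for the embedding search a production system would use, and `retrieve`, `build_grounded_prompt`, and the stopword list are hypothetical. When nothing relevant is found, the sketch returns `None` so the caller can escalate instead of letting the model guess.

```python
import re
from collections import Counter

# Small stopword list so filler words don't count as matches (an assumption; tune for your data).
STOPWORDS = {"a", "an", "the", "is", "are", "of", "to", "do", "does", "how",
             "what", "who", "for", "in", "on", "and", "many"}


def tokenize(text):
    """Lowercase, split into words, and drop stopwords."""
    return [w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS]


def retrieve(question, passages, top_k=3):
    """Rank passages by word overlap with the question.

    Keyword overlap stands in for the embedding search a real system would use.
    """
    query = Counter(tokenize(question))
    scored = []
    for passage_id, text in passages.items():
        words = Counter(tokenize(text))
        overlap = sum(min(count, words[w]) for w, count in query.items())
        if overlap > 0:
            scored.append((overlap, passage_id))
    scored.sort(key=lambda pair: (-pair[0], pair[1]))  # best match first, ties by ID
    return [passage_id for _, passage_id in scored[:top_k]]


def build_grounded_prompt(question, passages, top_k=3):
    """Return a prompt that restricts the model to the retrieved passages, or None."""
    ids = retrieve(question, passages, top_k)
    if not ids:
        return None  # nothing relevant: escalate instead of letting the model guess
    context = "\n".join(f"[{pid}] {passages[pid]}" for pid in ids)
    return (
        "Answer only using the passages below and cite passage IDs in brackets. "
        "If they do not contain the answer, respond with: "
        "'I cannot answer this based on available information.'\n\n"
        f"{context}\n\nQuestion: {question}"
    )


docs = {
    "refund-policy": "Refunds are issued within 14 days of purchase.",
    "shipping": "Standard shipping takes 3 to 5 business days.",
    "warranty": "The warranty covers manufacturing defects for one year.",
}
print(retrieve("How many days do refunds take?", docs))  # ['refund-policy', 'shipping']
```

Keeping passage IDs in the prompt lets you check later whether the answer cited what was actually retrieved.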

Fine-Tuning Works-If You Have the Resources

Fine-tuning means retraining the model on your own data. It’s powerful, but expensive. Microsoft found that fine-tuning a model on 10,000+ high-quality medical Q&A pairs cut hallucinations by 58%. That’s huge. But here’s the catch:

- You need hundreds of hours of expert time to label data. Vectara says 200-300 hours for a single domain.

- You need GPU power. Training isn’t cheap.

- You need ongoing maintenance. As rules change, you retrain.
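Much of that expert time goes into producing clean, consistent training pairs. As an illustration only, the sketch below deduplicates reviewed question-answer pairs and serializes them as JSON Lines. The chat-style `messages` layout and the `to_finetune_jsonl` name are assumptions modeled on common fine-tuning formats; check the exact schema your training tool expects.

```python
import json


def to_finetune_jsonl(pairs):
    """Convert expert-reviewed (question, answer) pairs into JSON Lines.

    Blank entries and exact duplicates are skipped. The chat-style "messages"
    layout is an assumption; match whatever schema your training tool expects.
    """
    seen = set()
    lines = []
    for question, answer in pairs:
        q, a = question.strip(), answer.strip()
        if not q or not a or (q, a) in seen:
            continue
        seen.add((q, a))
        record = {"messages": [
            {"role": "user", "content": q},
            {"role": "assistant", "content": a},
        ]}
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines)


pairs = [
    ("What is our refund window?", "14 days from purchase."),
    ("What is our refund window?", "14 days from purchase."),  # duplicate, dropped
    ("", "Orphan answer with no question."),                   # blank question, dropped
]
print(to_finetune_jsonl(pairs))
```

Deduplication matters here: repeated pairs overweight a few answers, and blank or half-filled entries teach the model to produce incomplete responses.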

Post-Generation Fixes: Catching Lies After They’re Spoken

Sometimes, even the best prompts and RAG systems miss something. That’s where decoding-time and post-generation checks come in. Three proven methods:

- Context-Aware Decoding (CAD): Contrasts the model’s next-token predictions with and without the retrieved context, so the answer leans on the evidence rather than on memory. Reduces hallucinations by 29%.

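- DoLa (Decoding by Contrasting Layers): Compares next-token predictions from the model’s final layer with those from an earlier layer, favoring tokens the later layers grow more confident in, which tend to be more factual. Cuts errors by 33%.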

- Factual alignment: Adjusts the model’s internal weights to favor truth over fluency. Achieves 41% reduction with no loss in response quality.
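Decoding-time methods like these work on the model’s token scores rather than on finished text. As one illustration, here is a sketch of the context-aware decoding rule, which scores each token as (1 + alpha) × logit(token | context, question) − alpha × logit(token | question), so tokens supported by the retrieved context gain weight. It covers context-aware decoding only, not DoLa or factual alignment. The two-token vocabulary and logit values are made up; in practice both score vectors come from two forward passes of the same model, and `alpha = 0.5` is an assumed setting.

```python
import math


def softmax(logits):
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def context_aware_logits(with_context, without_context, alpha=0.5):
    """(1 + alpha) * logit(y | context, question) - alpha * logit(y | question).

    Tokens the retrieved context makes more likely gain weight; tokens the
    model favors from memory alone lose weight.
    """
    if len(with_context) != len(without_context):
        raise ValueError("both logit vectors must cover the same vocabulary")
    return [(1 + alpha) * w - alpha * wo for w, wo in zip(with_context, without_context)]


# Toy two-token vocabulary with made-up scores for "What is the capital of Peru?"
vocab = ["Lima", "Bogotá"]
with_ctx = [2.0, 1.5]     # the prompt includes a passage stating Lima is the capital
without_ctx = [1.0, 2.0]  # memory alone leans toward the wrong answer
plain = softmax(with_ctx)
adjusted = softmax(context_aware_logits(with_ctx, without_ctx))
print(f"P(Lima) plain: {plain[0]:.2f}, context-aware: {adjusted[0]:.2f}")
# P(Lima) plain: 0.62, context-aware: 0.78
```

The extra forward pass without context is the main cost: it roughly doubles compute per generated token, which is worth weighing against the latency concerns discussed later.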

Human-in-the-Loop: When AI Needs a Supervisor

Some answers are too risky to trust to AI alone. Amazon Bedrock Agents lets you build workflows where:

- If the AI’s confidence score is below 85%, it flags the answer.

- A human reviews it before it is sent to the customer.

- If it says "I don’t know," it’s automatically routed to support.
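The three routing rules above can be expressed in a few lines. This is not the Bedrock Agents API; it is a framework-neutral sketch of the same logic, assuming your pipeline already attaches a confidence score between 0 and 1 to each draft answer. `DraftAnswer`, `route`, and the queue names are hypothetical.

```python
from dataclasses import dataclass

CONFIDENCE_THRESHOLD = 0.85  # the 85% cutoff described above
ABSTAIN_PHRASES = ("i don't know", "i cannot answer this based on available information")


@dataclass
class DraftAnswer:
    text: str
    confidence: float  # assumed 0-1 score produced elsewhere in your pipeline


def route(draft: DraftAnswer) -> str:
    """Decide where a generated answer goes before a customer sees it."""
    normalized = draft.text.strip().lower().replace("\u2019", "'")  # unify curly apostrophes
    if any(phrase in normalized for phrase in ABSTAIN_PHRASES):
        return "support_queue"  # the model declined to answer: route to a person
    if draft.confidence < CONFIDENCE_THRESHOLD:
        return "human_review"  # low confidence: a reviewer approves or edits first
    return "send_to_customer"


print(route(DraftAnswer("Refunds are issued within 14 days.", 0.92)))  # send_to_customer
print(route(DraftAnswer("Refunds are issued within 30 days.", 0.61)))  # human_review
print(route(DraftAnswer("I don't know.", 0.97)))                       # support_queue
```

The abstention check runs first on purpose: a confident "I don’t know" should still reach a person rather than being sent to the customer as a final answer.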

What’s Coming Next? The Future of Factuality

The field is moving fast.

- Iterative self-reflection: The AI generates an answer, checks it against a knowledge source, then revises. Medical tests showed a 52% reduction in hallucinations.

- Constitutional AI: Anthropic’s method trains the model to critique and revise its own outputs against a written set of principles. Early tests show 73% reduction.

- Knowledge graphs: Instead of plain text, the model queries structured databases of facts (e.g., "Aspirin → treats → headaches"). Methods like THAM reduced hallucinations by 51%.

- Multimodal checks: Google’s research projected that cross-referencing text with images or tables could cut hallucinations by 65% by 2026.
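The knowledge-graph idea can be illustrated with a set of subject-relation-object triples like the "Aspirin → treats → headaches" example. The sketch below is not THAM or any published method; it assumes claims have already been extracted from the model’s answer as triples (a step not shown), and `check_claim` and the sample facts are hypothetical. It treats a missing object for a known subject and relation as unsupported, which is a closed-world simplification.

```python
# Illustrative (subject, relation, object) triples; a real system would query a curated graph.
KNOWLEDGE_GRAPH = {
    ("aspirin", "treats", "headaches"),
    ("aspirin", "interacts_with", "warfarin"),
}


def check_claim(subject, relation, obj, graph=KNOWLEDGE_GRAPH):
    """Classify one extracted claim against the graph.

    'supported'   - the exact triple is present
    'unsupported' - the graph knows this subject and relation but not this object
                    (a closed-world simplification)
    'unknown'     - the graph has nothing on this subject and relation
    """
    triple = (subject.lower(), relation, obj.lower())
    if triple in graph:
        return "supported"
    covered = any(s == triple[0] and r == relation for s, r, _ in graph)
    return "unsupported" if covered else "unknown"


print(check_claim("Aspirin", "treats", "headaches"))    # supported
print(check_claim("Aspirin", "treats", "diabetes"))     # unsupported
print(check_claim("Ibuprofen", "treats", "headaches"))  # unknown
```

Separating "unsupported" from "unknown" matters: the first suggests a hallucination, while the second only means the graph lacks coverage and the claim needs another check.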

What Should You Do Right Now?

You don’t need to do everything. Pick one path based on your needs:

- For most teams: Start with ICE prompting + low temperature + RAG. Use clean, organized data. This covers 80% of cases.

- For regulated industries (healthcare, finance, legal): Add human review. Flag low-confidence answers. Train your team to spot lies.

- For internal tools with limited data: Try Knowledge Injection. It’s cheaper than full fine-tuning.

- For high-stakes automation: Combine RAG with factual alignment or post-editing.

Common mistakes to avoid:

- Using vague prompts like "Be helpful." That’s how hallucinations start.

- Feeding RAG messy, unvetted data. Garbage in, garbage out, even if it sounds smart.

- Believing fine-tuning is a quick fix. It’s not. It’s a long-term project.

- Ignoring latency. If your users notice delays, they’ll stop using the system.

Frequently Asked Questions

What causes LLMs to hallucinate?

LLMs hallucinate because they’re trained to predict the next word based on patterns, not facts. If a false statement appears often in training data or sounds plausible, the model will repeat it, even if it’s wrong. They have no built-in way to verify what they say against a source of truth. They only know what’s likely, not what’s real.

Is RAG better than fine-tuning for reducing hallucinations?

Yes, for most use cases. RAG reduces hallucinations by 63-72% and doesn’t require massive datasets or training time. Fine-tuning can reduce hallucinations by up to 58%, but it needs 200-300 hours of expert annotation and expensive compute. RAG is faster, cheaper, and easier to update. Fine-tuning is only worth it if you have a stable, high-volume task with tons of labeled data.

Can I use free LLMs like Mistral or Llama to reduce hallucinations?

Yes, and you should. Open-weight models like Mistral-7B and Llama 3 can come close to expensive proprietary models on many focused tasks when you use proper prompting and RAG. The model’s size matters less than how you control it. Free models can be fine-tuned, prompted, and augmented with your data just like paid ones.

How do I know if my LLM is hallucinating?

Use RAGAS or similar tools to measure answer correctness and relevance. Manually test with known facts: ask for a company’s founding date, a law’s exact wording, or a drug’s side effects. If the model answers confidently but incorrectly, it’s hallucinating. Set up automated checks that flag answers lacking source citations or contradicting your knowledge base.
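An automated citation check like the one described above can be a few lines of code. This sketch assumes answers cite passage IDs in square brackets, such as `[refund-policy]`; that format and `audit_answer` are hypothetical. It only catches missing or invented citations; detecting contradictions with your knowledge base still needs a separate check, such as RAGAS scoring or human review.

```python
import re

# Assumes answers cite passages as [passage-id]; adapt the pattern to your citation style.
CITATION_PATTERN = re.compile(r"\[([^\[\]]+)\]")


def audit_answer(answer, retrieved_ids):
    """Return a list of problems; an empty list means the answer passed both checks."""
    cited = set(CITATION_PATTERN.findall(answer))
    problems = []
    if not cited:
        problems.append("no citations")
    invented = sorted(cited - set(retrieved_ids))
    if invented:
        problems.append("cites unretrieved sources: " + ", ".join(invented))
    return problems


print(audit_answer("Refunds take 14 days [refund-policy].", ["refund-policy"]))  # []
print(audit_answer("Refunds take 30 days.", ["refund-policy"]))  # ['no citations']
```

Any non-empty result can feed the same flagging path as a low confidence score.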

Do all LLMs hallucinate the same way?

No. Larger models like GPT-4 or Claude 3 tend to hallucinate less than smaller ones, but the gap shrinks when they’re poorly prompted. A well-tuned Mistral-7B with RAG can outperform a poorly configured GPT-4. The model matters less than your control system. Prompting, retrieval, and verification are the real levers.

Will hallucinations ever be fully eliminated?

No. As models get better at reasoning, their fabrications can become more sophisticated and harder to spot, like fake court rulings or invented scientific studies. The goal isn’t perfection. It’s reducing risk to acceptable levels. With RAG, prompting, and human oversight, you can get hallucinations below 5% in most enterprise uses. That’s safe enough to deploy.

Next Steps

- Start today: Rewrite your top 5 prompts using ICE + low temperature.

- Build a small knowledge base: 50 clean documents on your most common questions.

- Test RAG with one use case: customer support FAQs or internal HR policies.

- Measure before and after: Track how often your model says "I don’t know" and how often it gets facts wrong.
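To make the before-and-after comparison concrete, the sketch below computes the two numbers suggested above from a hand-labeled test set: how often the model abstains and how often it answers confidently but wrongly. `EvalRecord`, `summarize`, and the abstention phrases are assumptions; adjust the phrase list to match the escalation wording in your prompts.

```python
from dataclasses import dataclass

ABSTAIN_PHRASES = ("i don't know", "cannot answer")


@dataclass
class EvalRecord:
    question: str
    answer: str
    is_correct: bool  # labeled by a person against a known answer


def is_abstention(answer):
    text = answer.lower().replace("\u2019", "'")  # unify curly apostrophes
    return any(phrase in text for phrase in ABSTAIN_PHRASES)


def summarize(records):
    """Share of answers that abstain, and share that are confidently wrong."""
    if not records:
        raise ValueError("need at least one record")
    n = len(records)
    abstained = sum(is_abstention(r.answer) for r in records)
    wrong = sum(not r.is_correct and not is_abstention(r.answer) for r in records)
    return {"idk_rate": abstained / n, "error_rate": wrong / n}


before = [
    EvalRecord("Capital of Peru?", "Bogotá", False),
    EvalRecord("Eiffel Tower completed?", "1920", False),
    EvalRecord("Refund window?", "14 days", True),
    EvalRecord("Drug X max dose?", "I don't know", False),
]
after = [
    EvalRecord("Capital of Peru?", "Lima", True),
    EvalRecord("Eiffel Tower completed?", "1889", True),
    EvalRecord("Refund window?", "14 days", True),
    EvalRecord("Drug X max dose?", "I cannot answer this based on available information.", False),
]
print(summarize(before))  # {'idk_rate': 0.25, 'error_rate': 0.5}
print(summarize(after))   # {'idk_rate': 0.25, 'error_rate': 0.0}
```

Counting abstentions separately from errors keeps a model that simply refuses everything from looking accurate: watch both numbers together.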