Customer Support Automation with LLMs: Routing, Answers, and Escalation

Dec, 18 2025

Imagine a customer service system that answers 80% of questions in under 15 seconds, routes the rest to the right human agent, and cuts your support costs by nearly half. That’s not science fiction; it’s what companies are doing today with Large Language Models (LLMs). But it’s not as simple as plugging in a chatbot. Getting LLMs to handle routing, answers, and escalation right takes careful design, real-world testing, and smart trade-offs.

How LLMs Actually Handle Customer Questions

Most people think AI customer support means a chatbot that repeats the same script. But modern LLM systems do something smarter. They don’t just respond; they analyze. When a customer types in a question, the system doesn’t jump straight to an answer. First, it figures out what kind of question it is. Is this a billing issue? A broken product? A frustrated user who just got charged twice? Each of these needs a different response strategy.

Systems like those from LivePerson and Portkey.ai look at the first 4 to 6 messages in a conversation to detect intent. They don’t rely on keywords alone. They understand context. If someone says, “I’ve been waiting three days and now my order is canceled? This is ridiculous,” the model doesn’t just see “order canceled.” It picks up the emotion, the urgency, the history. That’s when it knows: this needs a human. Fast.

For simple questions, such as “Where’s my package?” or “How do I reset my password?”, the system uses lightweight models like GPT-3.5. These are fast, cheap, and accurate enough for routine tasks. For more complex issues, like troubleshooting a software bug or explaining a contract clause, it switches to GPT-4 or a fine-tuned version trained on your product docs. The result? Faster replies for easy stuff, better answers for hard stuff.

Routing Isn’t Just About Where to Send It: It’s About Which Model to Use
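The tiered idea described above (a lightweight model for routine questions, a stronger model for complex ones, a human when frustration shows) can be sketched in a few lines. This is only an illustration under stated assumptions: the tier names, the phrase lists, and the `route` function are hypothetical, and simple phrase matching stands in for the trained intent classifier a real system would use.

```python
from dataclasses import dataclass

# Hypothetical tier names; substitute whatever models you actually deploy.
LIGHT_MODEL = "light-model"
STRONG_MODEL = "strong-model"
HUMAN = "human-agent"

# Assumed phrase lists, for illustration only. A production system would
# use a trained classifier rather than substring matching.
SIMPLE_INTENTS = {
    "order_status": ("where's my package", "where is my package", "track my order"),
    "password_reset": ("reset my password", "forgot my password"),
}
FRUSTRATION_HINTS = ("ridiculous", "unacceptable", "third time")


@dataclass
class Route:
    target: str  # which tier handles the conversation
    reason: str  # why that tier was chosen


def route(messages: list[str]) -> Route:
    """Pick a handler from the opening messages of a conversation."""
    # Look only at the first few messages, and normalize curly apostrophes.
    text = " ".join(messages[:6]).lower().replace("’", "'")
    # Frustration is checked first so upset customers never hit a bot loop.
    if any(hint in text for hint in FRUSTRATION_HINTS):
        return Route(HUMAN, "frustration")
    for intent, phrases in SIMPLE_INTENTS.items():
        if any(phrase in text for phrase in phrases):
            return Route(LIGHT_MODEL, intent)
    # Anything unrecognized is treated as complex and sent to the stronger model.
    return Route(STRONG_MODEL, "complex_or_unknown")
```

Checking frustration before intent is a deliberate choice in this sketch: a message can be both a simple order-status question and an angry one, and the article’s position is that the angry one should reach a person.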

Static routing is the old way: all billing questions go to Model A, all tech questions go to Model B. Simple, but wasteful. You’re using a Ferrari to deliver a letter when a bicycle would do. Dynamic routing changes that. It looks at the question, assesses its complexity, and picks the right model on the fly.

A Shopify merchant using this approach found that 37% of their queries were simple billing questions. Those got routed to a small, fast model with 98% accuracy. Product troubleshooting, 42% of queries, went to a general-purpose LLM. And the 8% of conversations with clear signs of anger or confusion? They were flagged for human agents before the AI even tried to respond.

This isn’t guesswork. Companies like AWS and Portkey.ai track performance in real time. If a model keeps failing on a certain type of question, the system learns. It adjusts. After two weeks of live data, one logistics company improved routing accuracy by 22% just by letting the system adapt to real customer language.

The cost savings are real. Using GPT-4 for every single query would be expensive. But by routing only half of queries to it, and the rest to cheaper models, companies cut infrastructure costs by 40%, while keeping 92% of the quality. That’s the sweet spot.

When the AI Gets It Wrong, and How to Fix It

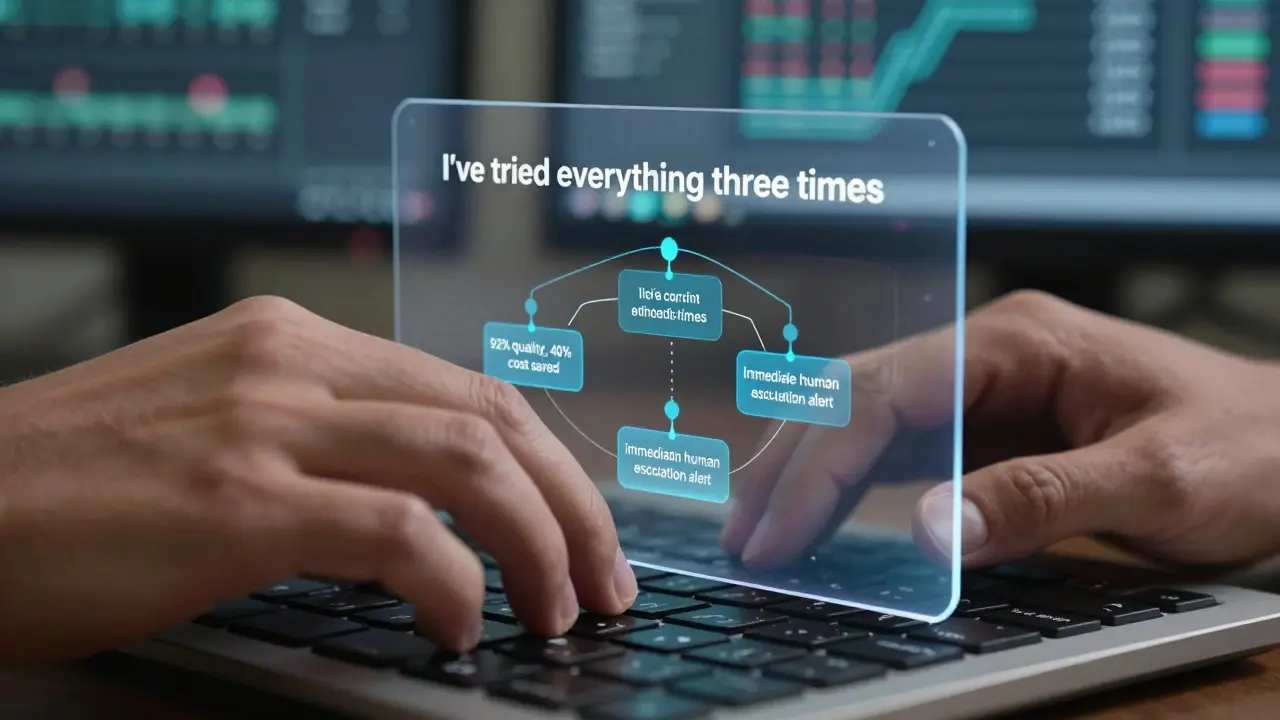

No system is perfect. The biggest failure point? Emotional context. Customers don’t always say, “I’m upset.” They say, “I’ve tried everything,” or “This is the third time I’ve called.” LLMs can miss these cues. MIT’s AI Ethics Lab found that when systems fail to detect frustration, customer dissatisfaction jumps by 22%.

That’s why every good LLM support system has mandatory escalation triggers. These aren’t just keywords. They’re patterns:

- Repeated use of words like “again,” “still,” or “never”

- Multiple short, angry messages in a row

- References to past failed attempts

- Time spent typing without sending, indicating hesitation or distress
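The four triggers listed above could be checked roughly as follows. This is a minimal sketch, not a description of any vendor’s implementation: the `Message` type, the `typing_seconds` field (a client-side signal you would have to collect yourself), the regular expressions, and every threshold are assumptions chosen for illustration. Note that message length is used as a crude proxy for “short, angry” messages; the code cannot tell anger from brevity.

```python
import re
from dataclasses import dataclass

# Assumed patterns; tune these against your own transcripts.
REPEAT_WORDS = re.compile(r"\b(again|still|never)\b", re.IGNORECASE)
PAST_ATTEMPTS = re.compile(
    r"\b(tried everything|third time|already (called|contacted|tried))\b",
    re.IGNORECASE,
)


@dataclass
class Message:
    text: str
    typing_seconds: float = 0.0  # hypothetical signal: time spent composing


def escalation_reasons(history: list[Message]) -> list[str]:
    """Return the triggers that fired; an empty list means no escalation."""
    reasons = []
    texts = [m.text for m in history]

    # 1. Repeated use of "again", "still", or "never" across the conversation.
    if sum(len(REPEAT_WORDS.findall(t)) for t in texts) >= 2:
        reasons.append("repeated_words")

    # 2. Three short messages in a row (length as a proxy for curt replies).
    recent = texts[-3:]
    if len(recent) == 3 and all(len(t.split()) <= 4 for t in recent):
        reasons.append("short_burst")

    # 3. References to past failed attempts.
    if any(PAST_ATTEMPTS.search(t) for t in texts):
        reasons.append("past_attempts")

    # 4. A long time typing that produced only a few words.
    if any(m.typing_seconds >= 60 and len(m.text.split()) <= 4 for m in history):
        reasons.append("long_typing")

    return reasons
```

Returning the list of reasons, rather than a bare yes/no, lets the human agent who picks up the conversation see why it was escalated.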

Implementation: What You Really Need to Know

You can’t just buy a tool and turn it on. Implementation takes time, and it’s not just technical; it’s cultural.

Start with data. Gather 5,000 to 10,000 real customer interactions. These aren’t sample scripts. They’re actual chats, emails, support tickets. Feed them into the model. Fine-tune it on your product, your tone, your common issues. A retail company skipped this step and ended up with an AI that kept suggesting refunds for non-refundable items. Cost them $47,000 in a month.

Next, integrate. Most businesses use CRM tools like Salesforce or Zendesk. Connecting the LLM to those isn’t plug-and-play. It requires API work, data mapping, and testing. Sixty-three percent of companies report this as the hardest part. If you don’t have a developer or a technical partner, you’ll hit walls.

Then, test. Run the system in parallel with human agents for two weeks. Compare response times, resolution rates, and customer satisfaction scores. Don’t launch until the AI matches or beats human performance on routine tasks.

Finally, monitor. Set up alerts for misrouted queries. Track which models fail most often. Re-train monthly. This isn’t a “set it and forget it” tool. It’s a living system.

Who’s Doing This Right, and Who’s Not

E-commerce leads the pack. Shopify merchants using LLM routing handle 1,200 daily queries across 17 languages with 83% first-response accuracy. That’s impossible with human agents alone. Financial services are catching up. Banks now use LLMs to explain fee structures, track transaction disputes, and even guide customers through fraud reports, all with strict compliance controls built in.

But failure stories are common too. One travel company automated its refund requests. The AI kept approving refunds for canceled flights that weren’t eligible. Customers got angry. The company had to shut it down. The difference? The winners trained their models on real, messy data. The losers used generic templates.
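One way to avoid ending up among the failure stories is to make the launch rule from the implementation steps explicit: compare the AI with human agents on response time, resolution rate, and satisfaction over the parallel run, and hold the launch if the AI trails on any of them. The sketch below assumes you already have those three aggregates for routine tasks; the `Metrics` type and `launch_blockers` function are hypothetical names, and the sample numbers in the usage note are invented purely to show the call.

```python
from dataclasses import dataclass


@dataclass
class Metrics:
    median_response_seconds: float  # lower is better
    resolution_rate: float          # fraction of queries resolved, higher is better
    csat: float                     # customer satisfaction score, higher is better


def launch_blockers(ai: Metrics, human: Metrics) -> list[str]:
    """List the metrics on which the AI is still worse than human agents."""
    blockers = []
    if ai.median_response_seconds > human.median_response_seconds:
        blockers.append("response_time")
    if ai.resolution_rate < human.resolution_rate:
        blockers.append("resolution_rate")
    if ai.csat < human.csat:
        blockers.append("csat")
    return blockers


def ready_to_launch(ai: Metrics, human: Metrics) -> bool:
    """True only when the AI matches or beats humans on every metric."""
    return not launch_blockers(ai, human)
```

For example, with made-up figures, `launch_blockers(Metrics(12.0, 0.81, 4.3), Metrics(95.0, 0.80, 4.4))` reports that satisfaction is the one metric still holding the launch back.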

Sarah McWhirter

December 20, 2025 AT 03:08

So let me get this straight - we’re letting an AI decide who gets human help based on how many times someone types ‘again’? What if I’m just really bad at English? Or if I’m crying and can’t type well? What if the algorithm thinks my grief is ‘low urgency’ because I didn’t use caps? I’ve seen this before. First they automate the help desk, then they automate the empathy. Next thing you know, your grandma’s Medicare appeal gets routed to a bot that says ‘I’m sorry you feel that way’ and logs it as ‘resolved.’ We’re not building tools. We’re building ghosts in the machine… and they’re learning to ignore us.

Ananya Sharma

December 22, 2025 AT 00:58

Let’s be honest - this entire ‘LLM routing’ paradigm is just corporate laziness dressed up as innovation. You’re not ‘freeing up agents’ - you’re offloading emotional labor onto customers who now have to navigate five layers of AI before someone with a pulse even acknowledges they exist. And let’s not pretend GPT-4 is ‘better’ at understanding context - it’s just better at hallucinating plausible-sounding nonsense that sounds like empathy. I’ve seen customers spend 27 minutes arguing with a bot that kept insisting their refund was ‘processed’ when it was clearly stuck in a loop. The real cost savings? The ones you’re not counting: the mental health toll on customers, the erosion of trust, and the fact that now no one remembers how to solve problems without a chat window. This isn’t progress. It’s systemic avoidance.

kelvin kind

December 22, 2025 AT 19:54

Been using something similar at work. Works great for returns and password resets. Still gotta have humans for the messy stuff. Simple.

Ian Cassidy

December 23, 2025 AT 10:53

The dynamic routing architecture here is actually pretty elegant - leveraging lightweight LLMs like GPT-3.5 for high-volume, low-complexity intents while offloading high-stakes, context-heavy queries to fine-tuned GPT-4 variants reduces token expenditure by ~40% without significant degradation in NPS. The real win is the feedback loop: real-time misrouting telemetry feeds back into the classifier, enabling online learning without retraining the entire pipeline. That’s scalable.

That said, the emotional detection layer still relies on heuristic triggers - ‘again,’ ‘still,’ etc. - which are brittle. A better approach would be embedding sentiment vectors from transformer-based affective models like SentBERT or EmoRoBERTa to detect latent frustration patterns, not just lexical repetition.

Zach Beggs

December 24, 2025 AT 17:34

Love the part about training on real data. We did the opposite and our bot kept telling people to ‘reset their account’ when they were asking about shipping delays. Total disaster. Learned the hard way - real examples matter more than perfect scripts.

Kenny Stockman

December 24, 2025 AT 17:41

Biggest thing people forget: this tech doesn’t replace people - it redeems them. I used to be a support rep. 80% of my day was explaining how to click ‘reset password.’ Now? I get to help someone whose dog passed away and their subscription auto-renewed. That’s the work that matters. The bots took the boring stuff. That’s not evil - it’s human.

Antonio Hunter

December 25, 2025 AT 20:58

I want to emphasize something subtle here: the cultural shift required for this to work isn’t just technical - it’s psychological. Teams that succeed with LLM integration aren’t the ones that just deploy the tool; they’re the ones that reframe their identity. Support agents stop seeing themselves as ‘problem solvers’ and start seeing themselves as ‘meaning facilitators.’ That’s a profound change. It requires training, yes, but also leadership that validates the emotional weight of the work that remains. Without that, you end up with burned-out staff who feel like they’re just cleaning up after the AI’s mistakes. The real ROI isn’t in cost savings - it’s in restoring dignity to the work.

Paritosh Bhagat

December 27, 2025 AT 13:01

Wow. Just… wow. You people are seriously okay with this? Letting machines decide who gets help? I mean, really? You think a bot can tell the difference between someone who’s frustrated and someone who’s literally in crisis? I’ve seen it - people with mental health issues get stuck in loops because the AI doesn’t recognize ‘I can’t do this anymore’ as a cry for help. It just sees ‘repeated messages’ and escalates. But by then? It’s too late. And you call this innovation? This is negligence wrapped in buzzwords. And don’t even get me started on how companies use ‘we trained on real data’ as an excuse to scrape private chats without consent. GDPR? More like GDRP - ‘Give Data, Really Poor.’ You’re not building systems. You’re building traps. And you’re all complicit.