AI Coding Security: Protecting AI-Generated Code from Risks and Exploits

AI coding security is the set of practices and tools that prevent malicious exploits in code generated by artificial intelligence. Also known as secure AI development, it’s not just about writing clean code; it’s about making sure the AI itself doesn’t become a backdoor for hackers, leaks, or broken systems. Every time your team uses GitHub Copilot, ChatGPT, or any other AI coding assistant to generate a script, API, or form, you’re inviting an invisible risk: the AI might copy vulnerable patterns, leak sensitive data placed in prompts, or open up paths for prompt injection, a type of attack where malicious input tricks an AI into revealing secrets or running harmful code. This isn’t theoretical. Nonprofits handling donor data, client records, or health info are prime targets, and they often have fewer guards up than big tech firms.

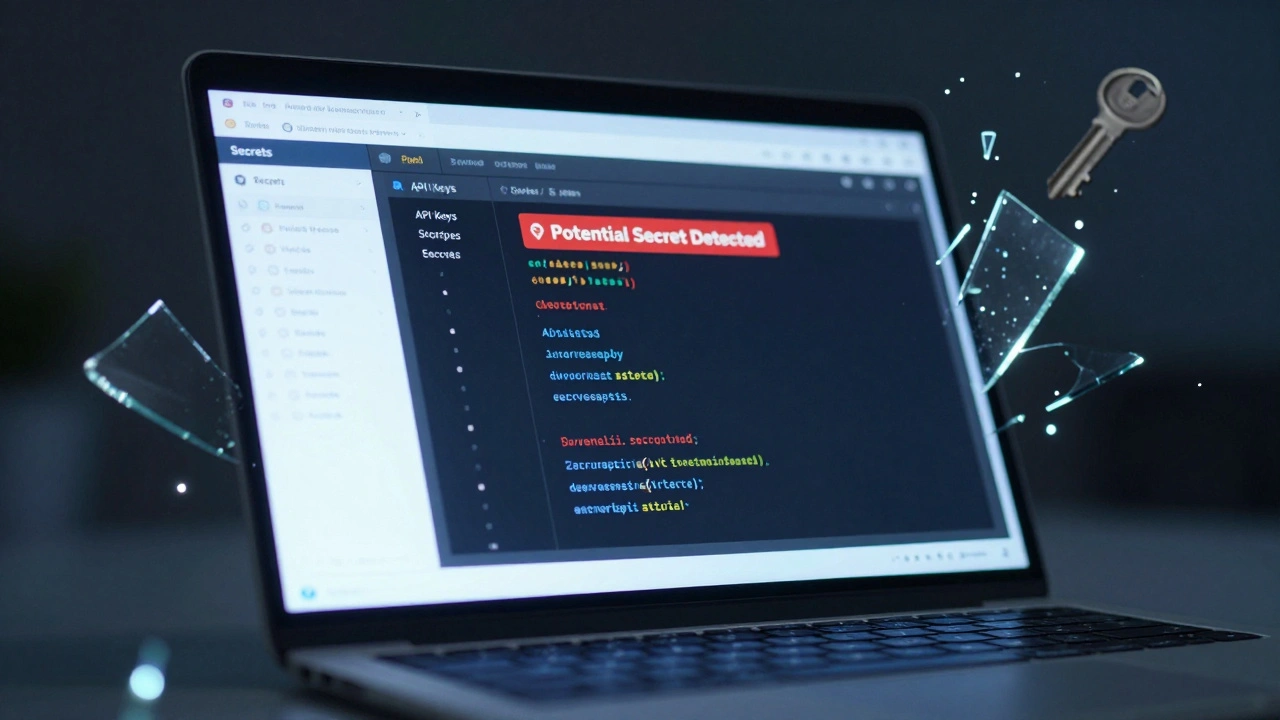

LLM incident management, a system for catching and responding to failures in large language models before they cause harm, is your first line of defense. AI-generated code doesn’t crash like traditional software; its flaws slip through quietly. A single line of AI-written SQL could expose your entire donor database. A poorly vetted API endpoint might let outsiders scrape private user info. That’s why you need processes to scan, log, and respond to these subtle threats. AI coding assistants, tools like GitHub Copilot or JetBrains AI Assistant that generate code from natural language prompts, are powerful, but they don’t come with built-in security. You have to add it yourself: restrict what data the AI can see, audit outputs before deployment, and train your team to spot red flags like unusual API calls or hardcoded keys.
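To make "audit outputs before deployment" concrete, here is a minimal sketch of a check that flags likely hardcoded keys in a generated snippet. The pattern list and the function name `find_hardcoded_secrets` are assumptions made up for illustration; dedicated secret scanners use far larger rule sets, so treat this as a starting habit rather than a complete tool.

```python
import re

# Hypothetical, deliberately small rule set for illustration only.
SECRET_PATTERNS = {
    # Shape of an AWS access key ID: "AKIA" followed by 16 uppercase letters/digits.
    "aws_access_key": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # PEM-style private key header.
    "private_key": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    # A secret-sounding name assigned a quoted literal of 8+ characters.
    "assigned_secret": re.compile(
        r"(?i)(api[_-]?key|secret|token|password)\w*\s*[:=]\s*['\"][^'\"]{8,}['\"]"
    ),
}


def find_hardcoded_secrets(source: str) -> list[tuple[int, str]]:
    """Return (line_number, pattern_name) for every suspicious line."""
    findings = []
    for line_number, line in enumerate(source.splitlines(), start=1):
        for name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((line_number, name))
    return findings


if __name__ == "__main__":
    generated = (
        "import os\n"
        'API_KEY = "sk_live_1234567890abcdef"\n'
        'db_url = os.environ["DATABASE_URL"]\n'
    )
    for line_number, name in find_hardcoded_secrets(generated):
        print(f"line {line_number}: possible {name}")
```

A check like this could run on every AI-generated file before it is committed. It only catches obvious cases, so a human review of the output is still needed.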

And it’s not just about code. The real danger lies in the gap between what AI can do and what your team understands. If your fundraiser uses vibe coding to build a donation form, they shouldn’t need to know Python to know that the AI shouldn’t be allowed to access your CRM. That’s where policies, not just tools, matter. Simple rules, like never feeding protected health information (PHI) into prompts, always reviewing generated code for hardcoded secrets, or using sandboxed environments for testing, can substantially reduce your risk. The posts below show you exactly how other nonprofits are doing this: from detecting prompt injections in real time, to setting up guardrails for AI tools, to running security checks on generated code before it ever goes live. You don’t need a team of engineers to stay safe. You just need the right habits and the right guidance.
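One way to back up a "no sensitive data in prompts" policy is to scrub text before it ever reaches an AI tool. The sketch below is a rough illustration, assuming US-style phone and Social Security number formats; the `REDACTIONS` list and the `redact_prompt` name are hypothetical. Pattern matching will miss plenty of real PHI (names, diagnoses, free-text notes), so it supports the policy rather than replacing it.

```python
import re

# Illustrative patterns only; they will not catch every format.
REDACTIONS = [
    (re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"), "[EMAIL]"),
    (re.compile(r"\b\d{3}-\d{2}-\d{4}\b"), "[SSN]"),
    (re.compile(r"\b\d{3}[-. ]\d{3}[-. ]\d{4}\b"), "[PHONE]"),
]


def redact_prompt(text: str) -> str:
    """Replace obvious identifiers with placeholders before sending a prompt."""
    for pattern, placeholder in REDACTIONS:
        text = pattern.sub(placeholder, text)
    return text


if __name__ == "__main__":
    raw = "Email jane.doe@example.org or call 555-123-4567 about SSN 123-45-6789."
    print(redact_prompt(raw))
    # prints: Email [EMAIL] or call [PHONE] about SSN [SSN].
```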

Security Basics for Non-Technical Builders Using Vibe Coding Platforms

Non-technical builders using AI coding tools like Replit or GitHub Copilot must avoid hardcoded secrets, use HTTPS, sanitize inputs, and manage environment variables. These simple rules prevent 90% of security breaches in vibe-coded apps.
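Two of these rules can be sketched in a few lines of Python: reading a secret from an environment variable instead of the source file, and passing user input to the database as a parameter rather than pasting it into the SQL. The `donors` table and the helper names `get_required_env` and `find_donor` are assumptions for illustration, not taken from any particular platform.

```python
import os
import sqlite3


def get_required_env(name: str) -> str:
    """Read a secret from the environment and fail loudly if it is missing."""
    value = os.environ.get(name)
    if not value:
        raise RuntimeError(f"Missing required environment variable: {name}")
    return value


def find_donor(conn: sqlite3.Connection, email: str) -> list[tuple]:
    """Look up a donor by email using a parameterized query."""
    # The ? placeholder makes sqlite3 treat `email` as data, never as SQL.
    cursor = conn.execute(
        "SELECT name, email FROM donors WHERE email = ?", (email,)
    )
    return cursor.fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")  # throwaway database for the demo
    conn.execute("CREATE TABLE donors (name TEXT, email TEXT)")
    conn.execute("INSERT INTO donors VALUES (?, ?)", ("Jane", "jane@example.org"))
    print(find_donor(conn, "jane@example.org"))
    # A classic injection string matches nothing, because it is compared as plain text.
    print(find_donor(conn, "' OR '1'='1"))
```

If generated code builds SQL by joining strings with user input, or assigns a key directly in the file, that is a sign to ask the AI tool for a version that follows the pattern above.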
