Secret Management for Nonprofits: Secure AI Tools and Practices

When your nonprofit uses AI tools, whether for fundraising, donor outreach, or program automation, you’re also taking on secret management: the practice of securely storing and controlling access to sensitive credentials such as API keys, passwords, and encryption keys. Also known as credential security, it’s more than a tech task; it’s a mission-critical responsibility. If an AI tool is compromised, donor data, financial records, or even program outcomes could be exposed. For nonprofits, that’s not just a risk but a betrayal of trust.

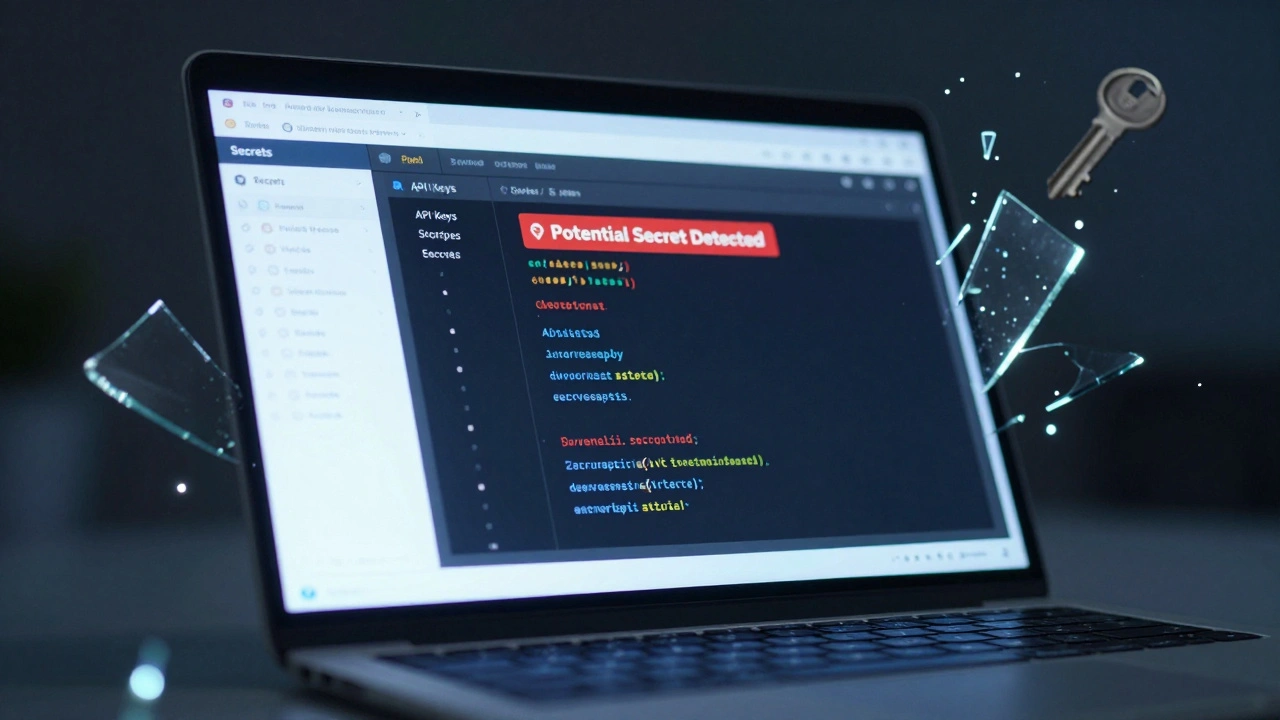

Secret management isn’t about fancy firewalls or expensive software. It’s about simple, repeatable habits: never hardcoding passwords into scripts, using encrypted vaults for API keys, and limiting who can access what. For example, if you’re using a language model to draft grant proposals, your connection to that model needs an API key. That key shouldn’t live in a Google Doc titled ‘AI Keys.’ It should sit in a secure vault like HashiCorp Vault or AWS Secrets Manager, with access granted only to the people who absolutely need it. The same goes for your donor database integrations, payment processors, and any third-party AI tool your team uses. API keys are unique credentials that let software services authenticate to each other. Often loosely called access tokens, they’re the digital keys to your nonprofit’s most valuable systems. Lose one, and you lose control.
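To make the "never hardcode" habit concrete, here is a minimal Python sketch that reads an API key from an environment variable instead of writing it into the script. The variable name `GRANT_WRITER_API_KEY` and the helper names `get_secret` and `mask` are hypothetical, chosen only for illustration; a vault such as HashiCorp Vault or AWS Secrets Manager would typically be what supplies the value at runtime.

```python
import os


class MissingSecretError(RuntimeError):
    """Raised when a required secret is not set in the environment."""


def get_secret(name: str) -> str:
    """Return the secret stored in the environment variable `name`.

    Fails loudly instead of falling back to a hardcoded default.
    """
    value = os.environ.get(name, "").strip()
    if not value:
        raise MissingSecretError(f"Environment variable {name} is not set")
    return value


def mask(secret: str, visible: int = 4) -> str:
    """Return a version of the secret that is safer to show in logs."""
    if len(secret) <= visible:
        return "*" * len(secret)
    return "*" * (len(secret) - visible) + secret[-visible:]


if __name__ == "__main__":
    # GRANT_WRITER_API_KEY is a hypothetical name used for illustration.
    try:
        key = get_secret("GRANT_WRITER_API_KEY")
        print("Loaded key:", mask(key))
    except MissingSecretError as err:
        print("Configuration problem:", err)
```

The script itself contains no credential, so it can be shared or committed without exposing the key; only the people and systems allowed to set the variable ever see the real value.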

Many nonprofits skip proper secret management because they think, ‘We’re too small to be targeted.’ But attackers don’t care whether you’re big or small; they care whether you’re easy. A leaked API key in a public GitHub repo is a common entry point. Once attackers are in, they can drain your fundraising platform, send phishing emails pretending to be you, or even train AI models on your donor data. That’s why LLM security, the practice of safeguarding large language models from prompt injection, data leaks, and unauthorized access (sometimes grouped under AI safety protocols), is a core part of responsible AI use in nonprofits. It’s not enough to just use AI. You have to use it safely. That means rotating keys regularly, auditing access logs, and training your team to treat credentials like the password to their bank account, because in many ways, they are.
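One way to catch a key before it reaches a public repo is to scan files for lines that look like hardcoded credentials. The sketch below is a deliberately simple illustration, not a replacement for a dedicated secret scanner: the pattern and the function name `find_hardcoded_secrets` are assumptions made for this example, and the pattern will miss many real-world key formats.

```python
import re

# Illustrative pattern only: a name that looks like a credential, followed by
# an assignment to a quoted literal of at least 8 characters.
SECRET_PATTERN = re.compile(
    r"""(api[_-]?key|secret|token|password)\w*\s*[:=]\s*['"][^'"]{8,}['"]""",
    re.IGNORECASE,
)


def find_hardcoded_secrets(text: str) -> list[int]:
    """Return the 1-based line numbers that appear to contain a hardcoded secret."""
    return [
        number
        for number, line in enumerate(text.splitlines(), start=1)
        if SECRET_PATTERN.search(line)
    ]


if __name__ == "__main__":
    sample = (
        "import os\n"
        'API_KEY = "abcd1234efgh"\n'                # hardcoded: flagged
        'api_key = os.environ["DONOR_API_KEY"]\n'   # read from environment: not flagged
    )
    print(find_hardcoded_secrets(sample))  # prints [2]
```

Running a check like this before every commit is a habit a small team can adopt without dedicated IT staff. If a key has already been pushed, treat it as compromised and rotate it rather than only deleting the line.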

What you’ll find below are real guides from nonprofits that have been there. They’ve locked down their AI tools, stopped data leaks before they happened, and built systems that keep their mission safe, not just their data. You’ll learn how to set up secret management without a full-time IT team, how to spot a compromised key, and which tools actually work for small teams with tight budgets. No fluff. No jargon. Just what you need to protect what matters most.

Security Basics for Non-Technical Builders Using Vibe Coding Platforms

Non-technical builders using AI coding tools like Replit or GitHub Copilot must avoid hardcoded secrets, use HTTPS, sanitize inputs, and manage environment variables. These simple rules prevent 90% of security breaches in vibe-coded apps.
