AI for Nonprofits in August 2025: Tools, Ethics, and Real-World Impact

AI for nonprofits means artificial intelligence tools designed specifically to help mission-driven organizations work smarter, not harder. Also known as nonprofit AI, it isn’t about replacing people; it’s about giving teams more time to focus on what matters: the people they serve. In August 2025, nonprofits across the U.S. and beyond started using AI not as a buzzword but as a daily tool, like email or spreadsheets. They weren’t chasing fancy tech. They were solving real problems: too many donor emails to answer, slow grant applications, volunteers who didn’t know where to start, and data that was messy or poorly secured.

Nonprofit AI tools are practical software built for organizations with limited budgets and few technical staff. Also known as nonprofit-friendly AI, these tools don’t need a data scientist to run them. That month, organizations used AI to auto-sort donor inquiries by urgency, summarize feedback from community surveys in seconds, and even predict which fundraising campaigns were likely to hit their goals based on past performance. One small food bank cut its volunteer scheduling time by 70% using a simple AI calendar that learned from past shifts. Another used AI to rewrite grant proposals in plain language, cutting its rewrite time from days to minutes.

Responsible AI is the practice of using artificial intelligence in ways that protect privacy, avoid bias, and respect the communities nonprofits serve. Also known as ethical AI for nonprofits, it became non-negotiable in August 2025. After a few high-profile cases in which AI misread donor demographics and sent tone-deaf messages, organizations doubled down on training. Teams started asking: Who made this tool? Has it been tested on diverse populations? Can we explain how it reached a decision? Templates for AI audits went viral in nonprofit Slack groups. You could hardly buy an AI tool anymore without seeing a clear ethics statement, and most leaders refused to use anything without one.

AI fundraising uses artificial intelligence to personalize outreach, predict donor behavior, and automate follow-ups without losing the human touch. Also known as smart fundraising, it’s not about spamming people with messages. In August, nonprofits saw real gains: email open rates jumped 35% when AI helped tailor subject lines to donor history. One youth organization used AI to identify lapsed donors who were likely to return, then reached out with a personal video message from a teen the organization had helped. No templates. No bots. Just real connection, powered by smart tech.

And then there’s AI operations: how nonprofits use artificial intelligence to handle internal tasks like scheduling, reporting, document sorting, and budget tracking. Also known as back-office AI, it’s the quiet hero behind the scenes. Staff no longer spent hours copying data from spreadsheets into grant portals; AI did it. Reports that used to take a week to compile were ready in an hour. Teams stopped drowning in paperwork and started having actual conversations with the people they served.

What you’ll find in this archive isn’t theory. It’s what actually happened in August 2025. Real stories from small nonprofits with tight budgets. Tools that worked. Mistakes that cost time. Lessons learned the hard way. No fluff. No hype. Just the practical, messy, human truth about how AI is changing nonprofit work—today, not someday.

Compression for Edge Deployment: Running LLMs on Limited Hardware

Learn how to run large language models on smartphones and IoT devices using model compression techniques like quantization, pruning, and knowledge distillation. Real-world results, hardware tips, and step-by-step deployment.
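The following standard-library Python sketch illustrates the first technique named above. It applies symmetric per-tensor int8 quantization to a list of weights. This is a toy built on stated assumptions and is not the article's method. `quantize_int8` and `dequantize_int8` are hypothetical helpers. Production toolchains typically quantize per channel, calibrate activations, and store int8 tensors natively. The payoff is storage: int8 uses one byte per weight where float32 uses four. Meanwhile, each weight's rounding error stays within half the scale.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization (toy version, hypothetical helper).

    Maps the range [-max|w|, +max|w|] linearly onto the integers [-127, 127]
    and returns the quantized values plus the float scale needed to
    recover approximate originals.
    """
    max_abs = max((abs(w) for w in weights), default=0.0)
    # An all-zero (or empty) tensor gets scale 1.0 to avoid dividing by zero.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest integer step, then clamp to the symmetric int8 range.
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize_int8(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from int8 values and their scale."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.0, 0.9, 0.033]
    q, scale = quantize_int8(weights)
    restored = dequantize_int8(q, scale)
    worst = max(abs(a - b) for a, b in zip(weights, restored))
    print("int8 values:", q)
    print(f"scale={scale:.5f}, worst-case error={worst:.5f}")
```

Clamping to [-127, 127] rather than [-128, 127] keeps the mapping symmetric around zero. As a result, a weight of exactly 0.0 always survives the round trip unchanged.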

Third-Country Data Transfers for Generative AI: GDPR and Cross-Border Compliance in 2025

GDPR restricts personal data transfers to third countries unless strict safeguards are in place. With generative AI processing data globally, businesses face real compliance risks and heavy fines. Learn what you must do in 2025 to stay legal.

Ethical Guidelines for Deploying Large Language Models in Regulated Domains

Ethical deployment of large language models in healthcare, finance, and justice requires more than good intentions. It demands continuous monitoring, cross-functional oversight, and domain-specific safeguards to prevent harm and ensure accountability.

Scaling for Reasoning: How Thinking Tokens Are Rewriting LLM Performance Rules

Thinking tokens are changing how AI reasons: not by making models bigger, but by letting them think longer at the right moments. Learn how this approach boosts accuracy on math and logic tasks without retraining.

Model Lifecycle Management: Versioning, Deprecation, and Sunset Policies Explained

Learn how versioning, deprecation, and sunset policies form the backbone of responsible AI. Discover why enterprises use them to avoid compliance failures, reduce risk, and ensure model reliability.
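Here is a minimal sketch that makes the three lifecycle terms concrete by encoding a deprecate-then-sunset policy in code. Everything in it is an illustrative assumption, not the article's framework. The `ModelVersion` and `Stage` types, the `check_serving` helper, and the example model name `donor-triage` are all hypothetical. So is the rule that deprecated versions keep serving with a warning while sunset versions are refused.

```python
from dataclasses import dataclass
from datetime import date
from enum import Enum
from typing import Optional


class Stage(Enum):
    ACTIVE = "active"          # fully supported; new integrations allowed
    DEPRECATED = "deprecated"  # still serving, but callers should migrate
    SUNSET = "sunset"          # retired; requests are refused


@dataclass(frozen=True)
class ModelVersion:
    name: str
    version: str
    deprecated_on: Optional[date] = None  # hypothetical policy date
    sunset_on: Optional[date] = None      # hypothetical policy date

    def __post_init__(self) -> None:
        # A sunset date before the deprecation date would skip the warning period.
        if (self.deprecated_on is not None and self.sunset_on is not None
                and self.sunset_on < self.deprecated_on):
            raise ValueError("sunset_on must not be earlier than deprecated_on")

    def stage(self, today: date) -> Stage:
        """Return the lifecycle stage this version is in on the given day."""
        if self.sunset_on is not None and today >= self.sunset_on:
            return Stage.SUNSET
        if self.deprecated_on is not None and today >= self.deprecated_on:
            return Stage.DEPRECATED
        return Stage.ACTIVE


def check_serving(model: ModelVersion, today: date) -> str:
    """Hypothetical serving-layer check: refuse sunset versions, warn on deprecated ones."""
    stage = model.stage(today)
    if stage is Stage.SUNSET:
        raise RuntimeError(f"{model.name}:{model.version} was sunset on {model.sunset_on}")
    if stage is Stage.DEPRECATED:
        return f"warning: {model.name}:{model.version} is deprecated; sunset on {model.sunset_on}"
    return "ok"
```

For example, `ModelVersion("donor-triage", "1.2.0", date(2025, 9, 1), date(2025, 12, 1)).stage(date(2025, 10, 1))` returns `Stage.DEPRECATED`. The policy dates live in data rather than in branching logic, so changing a schedule means editing a record, not the code.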

When Vibe Coding Works Best: Project Types That Benefit from AI-Generated Code

AI-generated code works best for repetitive tasks like forms, APIs, tests, and UI components, not for security-critical or complex logic. Learn which projects benefit most from vibe coding.

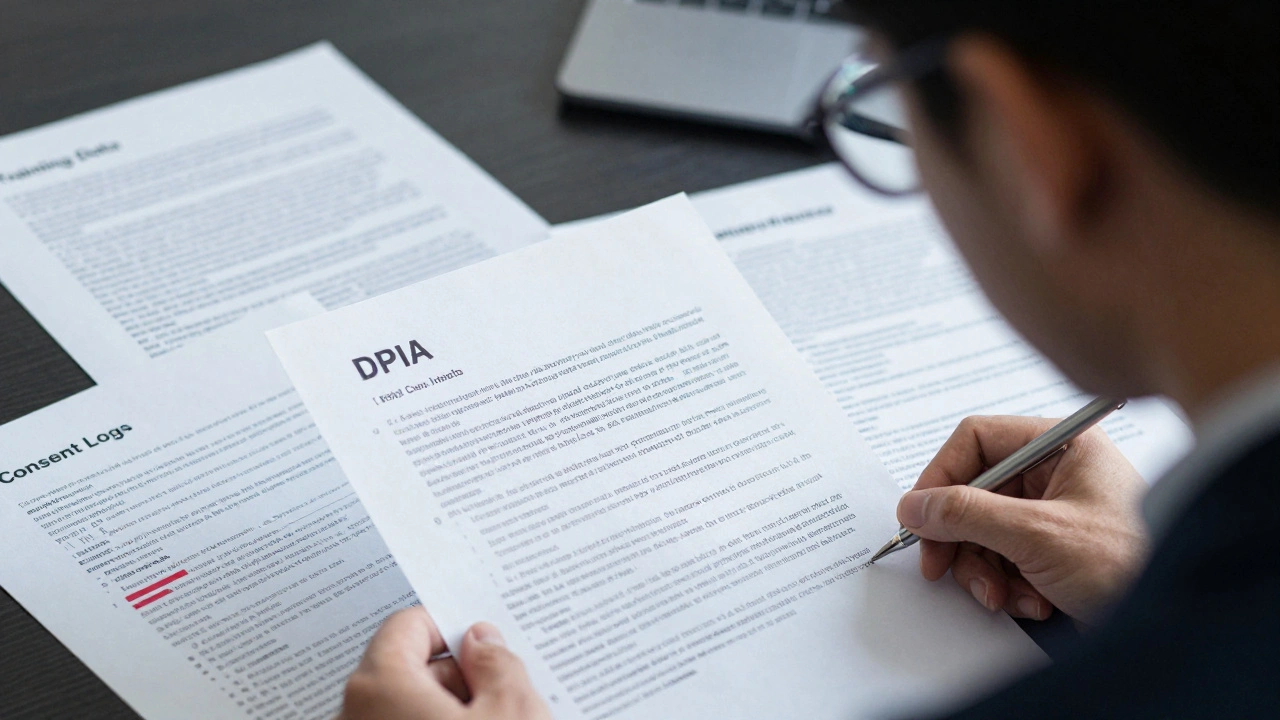

Impact Assessments for Generative AI: DPIAs, AIA Requirements, and Templates

Generative AI requires strict impact assessments under GDPR and the EU AI Act. Learn what DPIAs and FRIAs are, when they're mandatory, which templates to use, and how to avoid costly fines.
